A new study uses headcams and face decoding technology to help parents understand and communicate with teenagers.

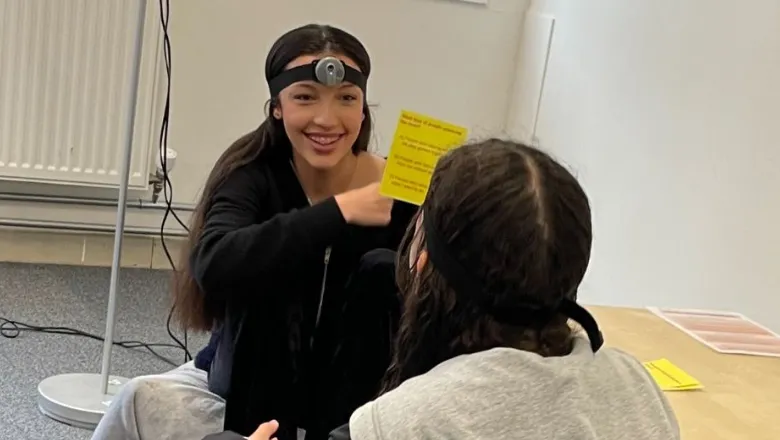

In a joint study between King's College London and Manchester Met, researchers combined headcams worn during real interactions with face decoding technology to read teenagers' facial expressions, potentially uncovering hidden feelings and offering insights into relationships.

Recordings of the adolescents' facial expressions were run through new AI software to detect and interpret subtle details of emotion over very short timescales.

The project is outlined in a paper published in the journal Frontiers, which shows how the technology is helping psychologists work with teenagers and their parents to foster better mutual understanding and communication. For example, the algorithms can pinpoint that someone is 20% worried or 5% happy, and could therefore identify when teenagers are masking their true feelings.

Using artificial intelligence, the software's readings can be used to predict human judgements of emotion, meaning computers can provide unique information on the timing and mixed presentation of emotions.

The protocols could soon be used in therapy sessions, helping to reduce mental health problems by promoting understanding and positive parent-adolescent interactions.

The project was funded by the European Research Council (ERC) and involved 110 families. It explored how well computers could capture authentic human emotions in young people aged 14–16 and their parents during everyday interactions. Their expressions were captured on footage recorded by headcams worn during card game sessions designed to elicit emotional responses.

The protocol for using the headcams at home, and the process of 'coding' teenagers' and parents' emotions, were co-designed with young people and communities through interactive workshops using theatre and immersive film, and through a mobile research van.

Mental health is a rapidly growing issue for young people: the proportion identified as having a mental health problem rose from 12% in 2017 to 20%, or one in five, in 2023.

'With the current mental health crisis in adolescence it is crucial we understand potential sources of resilience for young people. Human interaction is highly complex and multi-faceted. Our facial expressions serve as critical non-verbal social cues, communicating our emotions and intentions and supporting our social interactions. But a huge amount of individual variability exists in our expressions, much of which is outside our conscious control. Understanding how these variations are understood and responded to by parents may provide critical information to support relationships.'

Dr Nicky Wright, Psychology Lecturer at Manchester Met and lead researcher

'One of the most exciting things about this project is the potential to use headcam footage in family therapy sessions. For example, families could be asked to film themselves doing a task, either at home or in the session. The therapist could then review the task with the family, picking out positive moments in the interaction.'

Dr Tom Jewell, Lecturer in Mental Health Nursing at King's College London and senior author of the paper

The research team plan to explore the use of automated facial coding as a tool for families, and those who support them, to improve communication and relationships between parents and teenagers, and to better understand mood, mental health disorders and family interactions.

Read the full study, *Through each other's eyes: initial results and protocol for the co-design of an observational measure of adolescent-parent interaction using first-person perspective*, on the Frontiers website.