Synopsis

As single-core processors become obsolete, the aviation industry is turning to multi-core processors to replace them, with the additional advantage of improved system capabilities. However, unmitigated multi-core processors lack deterministic and predictable behaviour, which makes them difficult to assure, and that assurance is critical for applications whose failure could lead to loss of an aircraft. This essay provides an introduction to the challenges of certification for Multi-Core Processors in safety critical aircraft systems.

The MAA is receiving increasing enquiries about incorporation of Multi-Core Processors (MCPs) into aircraft design through the Military Air Systems Certification Process (MACP). This article is not a substitute for regulation or guidance material but serves to illustrate some of the challenges relating to MCP use in safety critical aircraft systems and their potential implications for future projects.

What are Multi-Core Processors?

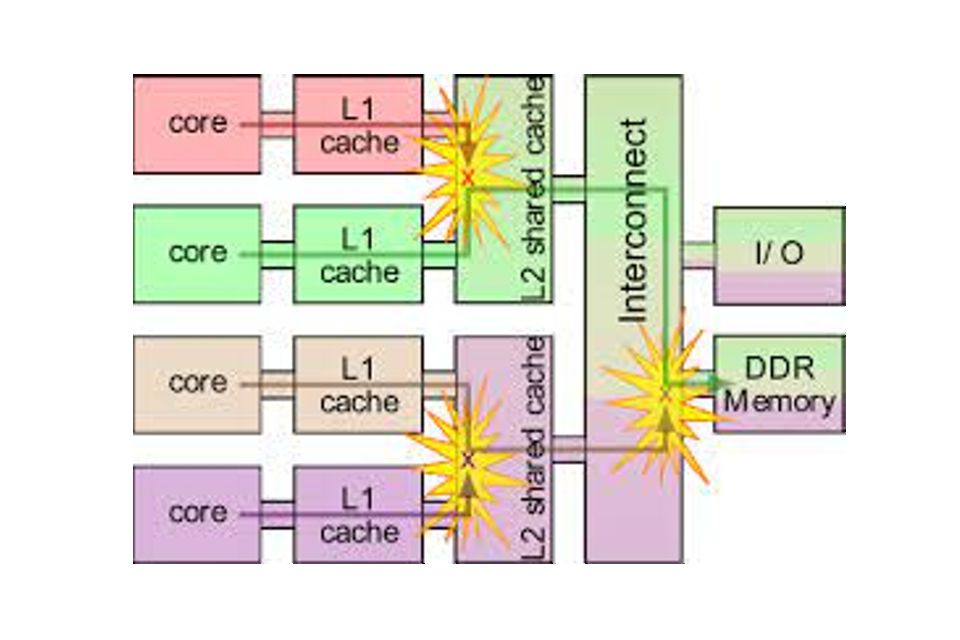

Multi-Core Processors are single computing components that contain two or more separate processing units, also known as 'cores'. An MCP can run instructions on multiple cores concurrently to increase speed and capability. MCPs generally use a tiered storage system, built from shared buses, caches, memory controllers and memory devices of different sizes, so that frequently used information is quickly accessible in smaller storage elements, while seldom-used information is held in larger, slower storage. There are several ways in which storage elements can be connected to, and communicate with, each other and the cores, and this arrangement is central to the design of an MCP for a particular application.

However, the strength of MCPs in speed and capability can also be their weakness. Contention, or interference, between the interconnected computing components has the potential to disrupt the application, so that functions take too long to execute or are processed out of sequence. This can be compared to an app taking a long time to load on your mobile phone or computer, so that you need to restart the app and / or device; or to getting the answers to a series of questions out of sequence. In an aviation context, a less severe example would be a delay in a mode selection on a cockpit screen. At the other extreme, the affected function could be a flight control stability system that expects an immediate response, where a lag could have catastrophic consequences.

Why Multi-Core Processors in the Defence Aviation Environment?

MCPs offer reduced size and weight, whilst increasing processing power in the systems in which they are used. Meanwhile, traditional single-core processors are becoming obsolete, driving a move towards multi-core processor use. Theoretically, an MCP can be used as a direct replacement for an older single-core processor, by locking the MCP to use only one core. Equally, MCPs can enable new technologies requiring greater and quicker processing capability, whilst reducing the chip count for the system. However, MCPs can lack predictability unless they are forced, by design or by the operating system (OS), to emulate a single-core processor, making them a particular challenge to certify for safety critical applications. As of 2022, the first regulatory Acceptable Means of Compliance (AMC) for MCPs has been released as European Union Aviation Safety Agency (EASA) AMC 20-193, and there is a clear roadmap to certification. No UK military MCP air system certification of a safety critical application has yet been completed to current industry standards, due to the novelty and demands of the challenge; similarly, few civil systems have been certified to the previous CAST-32A guidance [footnote 1].

Why are MCPs a challenge to certify in safety critical applications?

Current methods of software and hardware certification assurance hold principles of determinism and predictability at their heart. By comparison, MCPs have inherent unpredictability because of the dynamic way they allocate tasks to cores; they are complex and may be non-deterministic. This demands a deeper level of measurement and analysis when generating certification evidence.

MCP structures contain numerous interference channels, both visible and hidden. An interference channel arises when two independent cores, concurrently communicating with the storage system, compete for access to complete their tasks. Imagine congestion on a motorway narrowing from 3 lanes to 2: the impact of the interference channel could be minor in light traffic, or quite severe in heavy traffic, perhaps requiring a diversion from the motorway along an alternate route. This is what gives rise to the uncertainty and non-deterministic behaviour. Some days, this may add 1 minute to your journey and be barely noticeable, whereas other days it could add 3 hours, causing you to miss an important appointment. It will never be possible to remove these interference channels, but clearly understanding and minimising their performance impact in the context of the system they serve is important to support an operational output, most specifically for safety critical functions. This could be achieved through designed-in barriers (throttled / constrained chips) or through mitigations (Operating System).

Interferences due to concurrent access on shared resources. Source: Federal Aviation Administration.
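The traffic analogy can be turned into a simple bound. The sketch below is illustrative only: it assumes a hypothetical arbitration scheme in which every shared-resource access made by a task can be delayed once by each competing core, and all names and figures are invented for the example rather than taken from any real MCP.

```python
def interference_bound_ns(isolated_ns, shared_accesses, competing_cores, access_latency_ns):
    """Pessimistic execution-time bound under contention.

    Assumption (hypothetical arbitration model): each shared access made by
    the task may have to wait for one access from every competing core.
    """
    worst_wait_per_access_ns = competing_cores * access_latency_ns
    return isolated_ns + shared_accesses * worst_wait_per_access_ns


# Hypothetical task: 500,000 ns in isolation, 1,000 shared-memory accesses,
# each access occupying the shared resource for 100 ns.
for competing in (0, 1, 3):
    bound = interference_bound_ns(500_000, 1_000, competing, 100)
    print(f"{competing} competing core(s): bound = {bound} ns")
# 0 competing core(s): bound = 500000 ns
# 1 competing core(s): bound = 600000 ns
# 3 competing core(s): bound = 800000 ns
```

Even in this simplified model the bound grows with every additional active core and every shared access, which is why constraining cores or isolating shared resources improves predictability. A real MCP has many more channels than this single one, some of them hidden.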

Worst Case Execution Time (WCET) and high-water mark tests are run to analyse the time that tasks take to perform, but due to the complexity of the MCP infrastructure, the true worst-case value may never be discovered despite running the tests repeatedly. As a result, although these are quantitative demonstrations, they will only ever feed a qualitative assessment. There will come a point where an engineering risk factor will need to be applied and justified, based on the extent of testing, the perceived risk to function and the operator's tolerance to that risk. Interference between applications running on an MCP without mitigation usually generates a higher WCET than when constraints, such as temporal and spatial partitioning, are applied.

WCET is the longest possible execution time for a piece of code on a specific hardware platform, while the high-water mark is the longest execution time actually observed for an individual path during testing. WCET is often the more pessimistic value, and the analysis can be performed on smaller blocks of code to allow closer examination.
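The difference between an observed high-water mark and a budgeted worst case can be sketched in a few lines. This is a minimal illustration, not a certification method: the function names, the number of runs and the 20% margin are all assumptions made for the example, and a desktop timer is no substitute for measurement on the target hardware.

```python
import time


def measure_ns(task, runs):
    """Run `task` repeatedly and return each observed execution time in ns."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter_ns()
        task()
        samples.append(time.perf_counter_ns() - start)
    return samples


def high_water_mark_ns(samples):
    """Longest time actually observed; the true worst case may be longer."""
    return max(samples)


def budget_with_margin_ns(hwm_ns, margin_percent):
    """Apply an engineering margin (a judgement call) to the high-water mark."""
    return hwm_ns * (100 + margin_percent) // 100


# Hypothetical workload standing in for a real task.
samples = measure_ns(lambda: sum(range(10_000)), runs=200)
hwm = high_water_mark_ns(samples)
print("high-water mark (ns):", hwm)
print("budget with 20% margin (ns):", budget_with_margin_ns(hwm, 20))
```

Running the script several times will typically give different high-water marks, which illustrates why repeated testing alone cannot prove that the true worst case has been found.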

Testing and analysis can highlight instances where the WCET is beyond a tolerable outcome, allowing mitigations to be applied, either to eliminate the cause through design or to minimise its effects. Mitigations are wide ranging and depend on the situation and infrastructure, but can include robust time partitioning, and isolating caches and channels for sole use by one core. Any mitigation leads to a trade-off between performance, predictability, analysability and complexity, which may be necessary to provide certification assurance of MCP applications where determinism is required. For a single active core, this should not be an issue since this is generally an obsolescence solution; for new applications, it is important to select a chip developed specifically for aviation applications to reduce performance impacts due to interference effects.
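As an illustration of time partitioning, the sketch below checks a fixed schedule in which each partition is given its own time window within a repeating frame. The partition names, window sizes and the `check_schedule` helper are hypothetical and are not drawn from any particular operating system or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str
    window_us: int         # time window reserved for this partition
    wcet_estimate_us: int  # estimated worst case, including any margin


def check_schedule(partitions, major_frame_us):
    """Return a list of problems; an empty list means the schedule fits."""
    problems = []
    total_us = sum(p.window_us for p in partitions)
    if total_us > major_frame_us:
        problems.append(
            f"windows total {total_us} us, exceeding the {major_frame_us} us frame"
        )
    for p in partitions:
        if p.wcet_estimate_us > p.window_us:
            problems.append(
                f"{p.name}: estimate {p.wcet_estimate_us} us exceeds window {p.window_us} us"
            )
    return problems


# Hypothetical 10 ms frame shared by two partitions.
schedule = [
    Partition("flight_control", window_us=4_000, wcet_estimate_us=3_200),
    Partition("display", window_us=5_000, wcet_estimate_us=5_600),
]
for problem in check_schedule(schedule, major_frame_us=10_000):
    print(problem)
# display: estimate 5600 us exceeds window 5000 us
```

The trade-off described above is visible here: fixed windows make timing analysable, but any window time that a partition does not use is lost performance.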

Design Assurance Level (DAL) Allocation to Failure Condition

MCP assurance is structured using development rigour. Hazards and associated failure conditions are assessed as part of an aircraft design, including all Type Design Changes (see RA 5810 and RA 5820). The failure conditions are categorised according to their potential effect on the aircraft, crew, passengers and third parties, and range from 'Catastrophic' in the worst case to 'No Safety Effect' in the best case. Function Development Assurance Levels (FDAL) are derived from failure condition severity and correspond to the level of rigour required in the development of the function.

Functions at system level are implemented by 'Items' (hardware or software) as defined by ARP4754A [footnote 2], which in turn are allocated Item Development Assurance Levels (IDAL). For software items, the IDAL indicates the level of assurance to which the software needs to be developed, based on the failure condition, with a range of IDAL A - E. The MAA would expect IDAL A, as the highest IDAL, to be attributed against failure conditions where failure or malfunction of software could contribute to a catastrophic outcome. Each IDAL is assured through compliance with sets of defined objectives, with higher IDALs attracting a greater number of objectives to satisfy, including the requirement for greater levels of independence. In the same way, for hardware items the required objectives are derived from the assigned IDAL.
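The relationship between failure condition severity and assurance level can be pictured as a simple lookup. The text above names only the 'Catastrophic' and 'No Safety Effect' categories and the range A - E; the intermediate categories used below ('Hazardous', 'Major', 'Minor') are assumed from the classification conventionally used with ARP4754A, and the function names are hypothetical. The sketch gives the starting level only, before any architectural considerations are applied.

```python
# Severity categories ordered from worst to best; intermediate names are an
# assumption based on the conventional ARP4754A classification.
LEVEL_BY_SEVERITY = {
    "Catastrophic": "A",
    "Hazardous": "B",
    "Major": "C",
    "Minor": "D",
    "No Safety Effect": "E",
}


def assurance_level(severity):
    """Assurance level for a single failure condition severity."""
    return LEVEL_BY_SEVERITY[severity]


def most_demanding_level(severities):
    """Level driven by the worst failure condition a function contributes to."""
    # 'A' sorts before 'E', so min() selects the most demanding level.
    return min(assurance_level(s) for s in severities)


print(assurance_level("Catastrophic"))              # A
print(most_demanding_level(["Minor", "Hazardous"]))  # B
```

A function that contributes to several failure conditions takes the level of the most severe one, which is why a single catastrophic contribution is enough to drive level A.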

When assigning DALs to failure conditions, proof of functional independence can be used to reduce the DAL. When assigning DALs to lower-level functions within an architecture, it is possible to assign lower DALs to multiple independent functions that together fulfil the higher-level function. In software terms, there cannot be any dependence between the lower-level functions that could cause both to fail to fulfil the higher-level function concurrently. Such dependence includes the use of identical software code in otherwise independent functions.