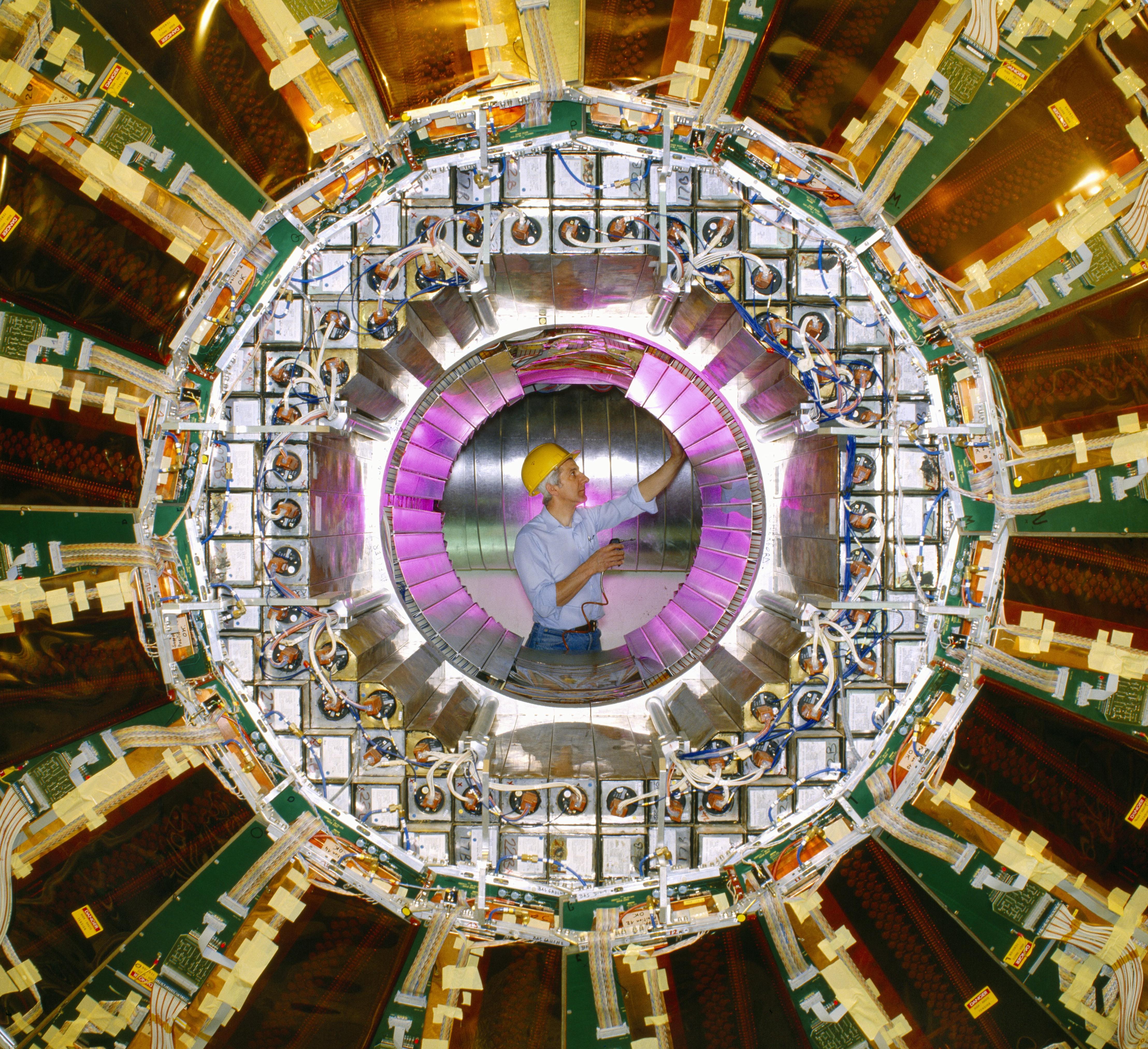

A technician working on the OPAL detector, one of the four particle detectors of the LEP collider. The photo was taken in 1989, and LEP began running later that year. Analysis of its data continued long after LEP was shut down in 2000. (Image: David Parker/Science Photo Library)

About a billion pairs of particles collide every second within the Large Hadron Collider (LHC), flooding the detectors with a petabyte of collision data that pours through highly selective filters known as trigger systems. Less than 0.001% of the data survives the process and reaches the CERN Data Centre, where it is copied onto long-term tape. This archive now represents the largest scientific data set ever assembled. Yet there may be more science in it than we can extract today, which makes data preservation essential for future physicists.

The last supernova explosion observed in the Milky Way dates back to 9 October 1604. How much more could we learn if, alongside the notes made by German astronomer Johannes Kepler at the time, we could see with our own eyes what he saw? The same applies to laboratory data: our ability to extract information from it relies on current computational capabilities, analysis techniques and theoretical frameworks. New findings may lie waiting, buried in some database, and the potential for future discoveries hinges on preserving the results we gather today.

For data to stand the test of time, it must be archived, duplicated, safeguarded and translated into modern formats before we lose the expertise and technology to read and interpret it. As outlined in the recent "Best-practice recommendations for data preservation and open science in high-energy physics" issued by the International Committee for Future Accelerators (ICFA), preservation efforts require planning and clear policy guidelines, as well as a stable flow of resources and continued scientific supervision. The Data Preservation in High-Energy Physics (DPHEP) group, established in 2014 under the auspices of ICFA and with strong support from CERN, estimates that devoting less than 1% of a facility's construction budget to data preservation could increase the scientific output by more than 10%.

In the latest issue of the CERN Courier, Cristinel Diaconu and Ulrich Schwickerath recall some of the most remarkable treasures unearthed from the data of past experiments. Examples include the Large Electron-Positron Collider (LEP), whose data remains relevant for future electron-positron colliders twenty-five years on, and HERA, whose data still informs studies of the strong interaction almost two decades after its shutdown.

Diaconu and Schwickerath advocate a joint commitment to international cooperation and open data as the way to maximise the benefits of fundamental research, in compliance with the FAIR principles of findability, accessibility, interoperability and reusability. With the High-Luminosity LHC upgrade on the horizon, data preservation will play an important role in making the most of its massive data stream.