Lack of incentives and low adoption of metadata standards are limiting AI's potential for bioimage analysis: a community initiative proposes solutions

AI can spot subtle patterns in millions of microscopy images or compare a patient scan against thousands of others in seconds. Yet, several technical and cultural hurdles, touching on metadata, incentives, formats and accessibility, are standing in the way.

BioImage Archive Team Leader Matthew Hartley (MH) and Bioinformatician Teresa Zulueta Coarasa (TZC) explain how community-driven recommendations could help.

What is metadata, and why is it important in bioimaging right now?

MH: Metadata is essentially the context around images and annotations. It explains what we are seeing and how the image was captured: when, where, and under what conditions.

For AI training, metadata is what makes a dataset interpretable, reusable, and valuable beyond the lab that generated it. The challenge is that different labs record metadata in different ways, making it hard for others to reuse their data. Agreeing on standards helps everyone speak the same language.

Your paper proposes guidelines to improve the reuse of bioimages for AI applications. How did you develop the guidelines?

MH: The idea came out of a workshop we organised in 2023 as part of the AI4Life project, which had 45 participants from across the community, including data producers, AI scientists, and bioimage analysts. We identified four groups of recommendations, summarised as MIFA, which stands for Metadata, Incentives, Formats, and Accessibility. The paper describing our recommendations has been published in Nature Methods.

How can we improve the reuse of bioimages for AI applications?

TZC: For metadata, we propose a new standard that focuses on image annotations, building on metadata standards like REMBI, which we developed in 2021. This is important because annotations, such as segmentation masks, are an essential part of these datasets, and scientists need to understand what they are and how they were generated.

In terms of incentives, we recognise that it takes a lot of time and effort to produce annotated datasets. Right now, there are few incentives to encourage labs to produce metadata or to share their images in open repositories such as the BioImage Archive. This needs to change, and it will require funders, journals, data archives and the bioimaging community to work together to create strong incentives.

Microscopy equipment saves images in a range of formats, depending on the manufacturer. We need to ensure common, interoperable data formats so labs can share and reuse images easily.

These aren't just abstract ideals; they're practical recommendations developed with input from the people producing the data and those who need it for AI training.

What impact could widespread adoption of MIFA have?

TZC: Life scientists spend months generating beautiful, painstakingly annotated datasets, which AI developers often struggle to interpret. Bringing both sides together could help bridge this gap. With standardised metadata, AI models trained on one dataset could be validated on others, improving reproducibility. It would unlock the ability to compare models, replicate results, and accelerate discovery. In short, standardisation makes bioimaging AI scalable.

MH: There's real momentum in the community to make this happen. For example, journals are recommending that datasets should be deposited in public archives. This in itself creates an incentive for researchers to structure and share their data more thoughtfully. We really believe that if data producers adopt the guidelines, we'll have a virtuous cycle: better datasets, better AI, and better science. A good first step is to read our recommendations and see if you can integrate them into your workflows.

Voices from the community

Below are a couple of comments from colleagues who have successfully used the MIFA guidelines in their work.

"Thanks to the MIFA guidelines and the BioImage Archive, I could easily locate appropriate new datasets for a project that investigated the transferability and suitable selection of pre-trained image segmentation models. Access to well-structured metadata made working with multiple datasets for training and evaluating neural networks much simpler and more time-efficient. Our results are available here." - Joshua Talks, EMBL PhD student

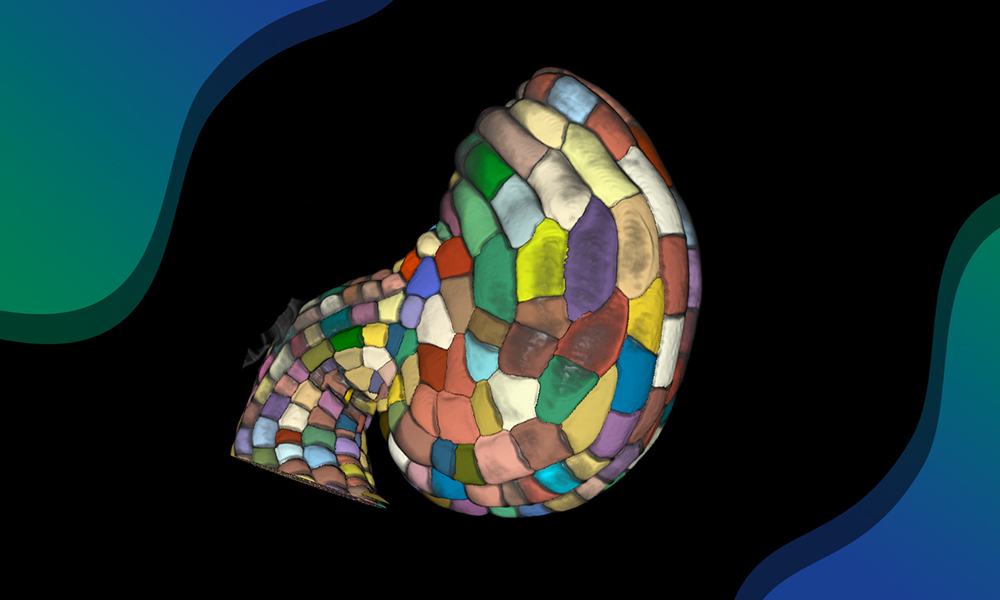

"We hope that, by sharing our images and annotations according to the MIFA guidelines, we will maximise the reusability of our datasets for training novel AI tools, and increase the visibility of the AI tool that we trained using those datasets. Our ReSCU-Nets are recurrent neural networks that integrate segmentation and tracking of cellular, subcellular, and supracellular structures from multidimensional confocal microscopy sequences." - Rodrigo Fernandez-Gonzalez, Professor at the University of Toronto