For years, all of us have relied on a certain level of artificial intelligence. Streaming services and social media develop algorithms to suggest content related to what you already like. Businesses use AI to analyze data, predict patterns or automate routine processes. Manufacturers program robots to do the repetitive tasks needed to create cars and other products.

The rise of generative AI, which can create new content, has accelerated business investment and heightened interest across society at large. Rather than just sorting preexisting information, OpenAI's ChatGPT, Google's DeepMind and other contenders can generate new text, images and video based on written prompts.

Researchers at the School of Systems Science and Industrial Engineering are examining AI from a variety of angles - the best ways to implement it, what we're getting out of it and how to improve it.

A new landscape for this 'boom'

Carlos Gershenson-Garcia, a SUNY Empire Innovation Professor, has studied AI, artificial life and complex systems for the past two decades.

When surveying the current "AI boom," he steps back for a moment and offers some historical perspective: "There always has been this tendency to think that breakthroughs are closer than they really are. People get disappointed and research funding stops, then it takes a decade to start up again. That creates what are called 'AI winters.'"

He points to frustrations with machine translation and early artificial neural networks in the 1960s, and the failure of so-called "expert systems" - meant to emulate the decision-making ability of human experts - to deliver on promised advances in the 1990s.

"The big difference is that today the largest companies are IT companies, when in the '60s and '90s they were oil companies or banks, and then car companies. All of it was still industrial," he says. "Today, all the richest companies are processing information."

With breakthroughs in large language models such as ChatGPT, some futurists have speculated that AI can do the work of secretaries or law clerks, but Gershenson-Garcia sees that prediction as premature.

"In some cases, because this technology will simplify processes, you will be able to do the same thing with fewer people assisted by computers," he says. "There will be very few cases where you will be able to take the humans out of the loop. There will be many more cases where you cannot get rid of any humans in the loop."

'More noise and detail'

Assistant Professor Stephanie Tulk Jesso researches human/AI interaction and more general ideas of human-centered design - in short, asking people what they want from a product, rather than just forcing them to use something unsuitable for the task.

"I've never seen any successful approaches to incorporating AI to make any work better for anyone ever," she says. "Granted, I haven't seen everything under the sun - but in my own experience, AI just means having to dig through more noise and detail. It's not adding anything of real value."

Tulk Jesso believes there are many problems with greater reliance on AI in the workplace. One is that many tech experts are overselling - AI should be a tool, rather than a replacement for human employees. Another is how it's often designed without understanding the job it's meant to do, making it harder for employees rather than easier.

Lawsuits about copyrighted materials "scraped" and repurposed from the internet remain unresolved, and environmentalists have climate concerns about how much energy generative AI requires to run. Among the ethical concerns are "digital sweatshops" in developing countries where workers train AI models while enduring harsh conditions and low pay.

Tulk Jesso also sees AI as too unreliable for important tasks. In 2024, for instance, Google's AI suggested adding glue to pizza to help the cheese stick better, as well as eating a small rock daily as part of a healthy diet.

Fundamentally, she says, we just don't know enough about AI and how it works: "Steel is a design material. We test steel in a laboratory. We know the tensile strength and all kinds of details about that material. AI should be the same thing, but if we're putting it into something based on a lot of assumptions, we're not setting ourselves up for great success."

Working alongside robots

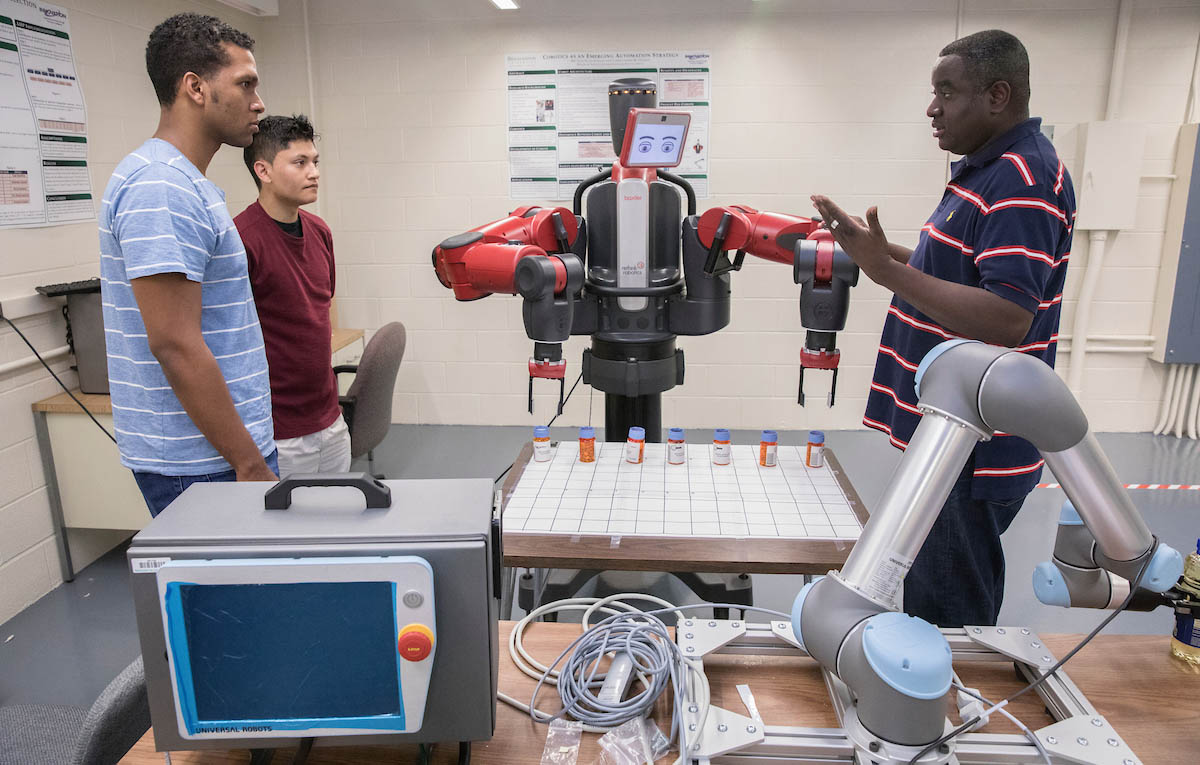

As the manufacturing sector is upgrading to Industry 4.0 - which utilizes advances such as artificial intelligence, smart systems, virtualization, machine learning and the internet of things - Associate Professor Christopher Greene researches collaborative robotics, or "cobots," as part of his larger goal of continual process improvement.

"In layman's terms," he says, "it's about trying to make everybody's life easier."

Most automated robots on assembly lines are programmed to perform just a few repetitive tasks and lack sensors for working side by side with humans. Some functions require pressure pads or light curtains for limited interactivity, but those are added separately.

Greene has led projects for factories that make electronic modules using surface-mount technology, and he has done research for automated pharmacies that sort and ship medications for patients who fill their prescriptions by mail. He also works on cloud robotics, which allows users to control robots from anywhere in the world.

Human workers are prone to error, but robots can perform a task thousands of times in exactly the same way, such as gluing a piece onto a product with the precise amount of pressure required to make it stick firmly without breaking it. They also can be more accurate when it matters most. Humans will still be required to program and maintain the automated equipment.

"Assembling pill vials with the right quantities is done in an automated factory," Greene says. "Cobots are separating the pills, they're putting them in bottles, they're attaching labels and putting the caps on them. They're putting it into whatever packaging there is, and it's going straight to the mail. All these steps have to be correct, or people die. A human being can get distracted, pick up the wrong pill vial or put it in the wrong package. If you correctly program a cobot to pick up that pill bottle, scan it and put it in a package, that cobot will never make a mistake."

Keeping AI unbiased

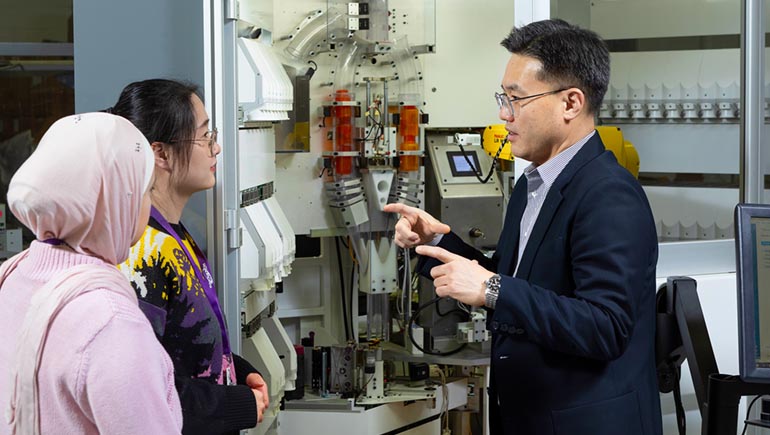

Associate Professor Daehan Won's AI research focuses on manufacturing and healthcare, but the goal for both is the same: Use it as a tool for making better decisions.

On an assembly line, AI helps human operators avoid trial and error when making their products. In a medical office, it can analyze CT scans and MRIs so doctors can locate cancerous tumors more easily.

Before we let AI take more control in our everyday lives, though, Won believes there are fundamental limitations that we need to solve first. One of them is the "black box" nature of many data-driven AI systems, with the exact process for reaching conclusions often remaining a mystery.

"When AI answers a question in the healthcare area, doctors ask: How did it come up with this answer?" he says. "Without that kind of information, they cannot apply it to their patients' diagnoses."

Another problem is keeping AI unbiased. Even when thousands of data points are entered into a comprehensive algorithm, the results it offers are only as good as the information that humans provide to it. Even generative AI like ChatGPT or DeepAI can be tilted toward more affluent cultures that have better access to cell phones or internet connections.

"There is a ton of research about AI being used for image processing to detect breast cancer, but from our review, most of that research is from developed countries like the U.S., the U.K. and Germany," Won says.

As part of the New Educational and Research Alliance (newERA) between Binghamton University and historically Black colleges and universities (HBCUs), Won is working with Tuskegee University on a project trying to ensure that Blacks, Asians and other people of color are better represented in breast cancer studies.

The manufacturing sector is not free of bias either, he adds: "The same company can have a factory in Mexico and a factory in China. Can we apply the same systems or not? They have very similar manufacturing lines, but the machines are different and there are different levels of operator expertise. This is one of the big problems I'm trying to solve."

Humans needed for big decisions

Like other SSIE faculty members, Professor Sangwon Yoon sees AI as a useful tool, but he does not believe it should be the final word on any subject - at least not yet.

One issue is that many people have doubts. According to a 2024 survey from the online research group YouGov, 54% of Americans describe themselves as "cautious" about AI, while 49% say they are "concerned," 40% are "skeptical," 29% are "curious" and 22% are "scared."

From a research perspective, though, Yoon knows that AI can help solve complex problems much faster and more easily than humans working on their own.

"We can apply it almost everywhere, because hardware systems get better and communication gets faster, so we can receive the data and use AI solutions," he says. "What can AI not do? That will be the more interesting question."

Yoon's AI research focuses on two areas - manufacturing and healthcare. In addition to improving closed-loop feedback control systems used on electronic circuit board assembly lines, he has studied better detection for breast cancer and strategies to curb hospital readmission rates.

However, medical professionals and patients are not willing to cede all decisions to AI. An algorithm does not decide on its own whether a cancer is malignant or benign, nor does it perform the surgery to remove it. Doctors still deliver the diagnoses and guide the final choices.

"We cannot 100% trust a human either, but at least we can communicate with doctors. We cannot talk to AI in the same way," he says. "It's the same with allowing AI to make military decisions. This is why AI solutions right now are mainly used for things like social media and entertainment, because if it's wrong, nobody gets harmed."

Beyond the 'right answer'

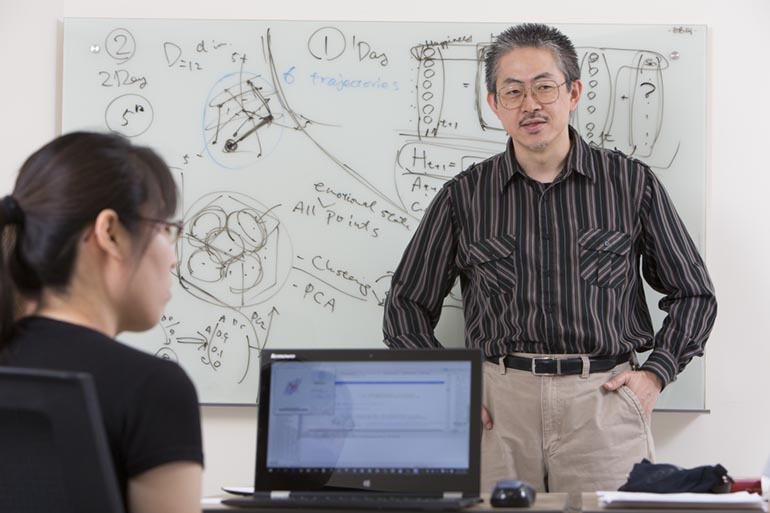

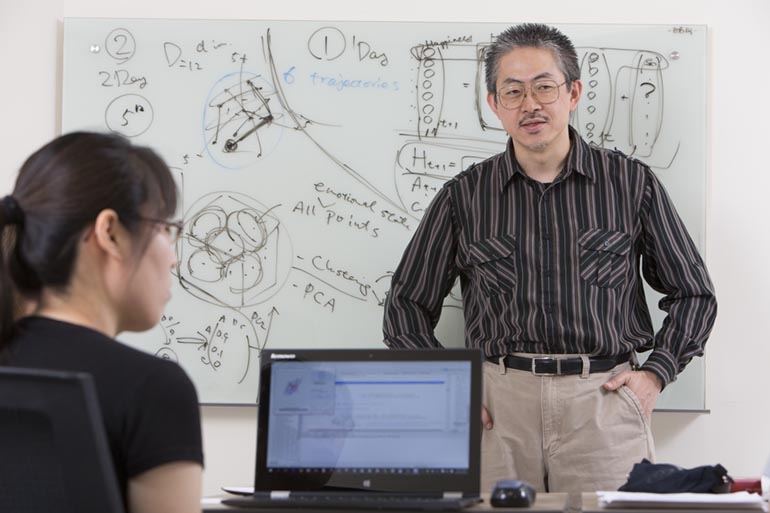

Distinguished Professor Hiroki Sayama's research isn't directly in artificial intelligence but rather in artificial life, a similar endeavor that attempts to create a lifelike system using computers and other engineered media. By trying to reproduce the essential properties of living systems, he hopes to better understand evolution, adaptive behavior and other characteristics.

One key difference he is delving into: Nearly all current AI and machine learning techniques are designed to converge on the best solution - the "right answer" - at the fastest speed.

"Real biological systems are not doing just that, but they also explore a broader set of options indefinitely without converging to a single option," says Sayama, who is vice president of the International Society for Artificial Life. "Similar things also may be said about human creativity and culture."

A new concept gaining attention in recent years is "open-endedness," in which a system continues to generate novel solutions on its own with no fixed goals or objectives.

Overall, he is hopeful about what AI could achieve beyond writing essays and producing generative art based on text prompts. He believes AI could coordinate and mediate human discussions and decision-making processes, as well as make things more accessible. For instance, it could convert something visual to an auditory or tactile format, or it might generate simpler explanations for difficult concepts.

One thing that concerns him is the loss of diverse ideas when we rely too much on the current AI: "Since everyone is using the same small set of AI tools, the outputs are becoming more and more similar. This is yet another reason why we need open-endedness in the next generation of AI."