Abstract

A novel vision sensor, inspired by the neural transmission mechanisms of the human brain, has been developed to efficiently and accurately extract object outlines even under fluctuating lighting environments. This advancement promises to significantly enhance perception capabilities in autonomous vehicles, drones, and robotic systems, enabling faster and more precise recognition of surroundings.

A research team, led by Professor Moon Kee Choi from the Department of Materials Science and Engineering at UNIST, in collaboration with Dr. Changsoon Choi's team at the Korea Institute of Science and Technology (KIST) and Professor Dae-Hyeong Kim's team at Seoul National University, announced the development of this synapse-mimicking robotic vision sensor.

Vision sensors serve as the eyes of machines, capturing visual information that is transmitted to processors (analogous to the human brain) for analysis. However, unfiltered data transfer can lead to increased transmission loads, slower processing speeds, and reduced recognition accuracy, particularly in environments with rapidly changing lighting conditions or regions with mixed brightness levels.

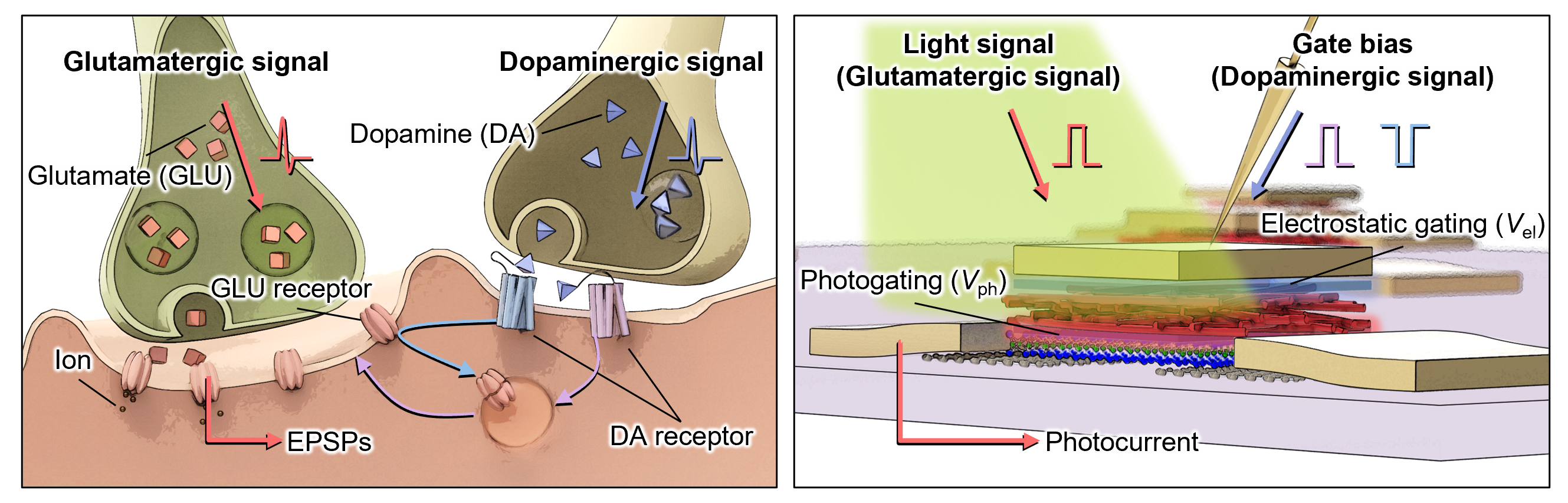

Figure 1. In-sensor multilevel image adjustment enabled by adjustable synaptic phototransistors. (A) Schematic illustration of biological synapses showing glutamatergic response for EPSP accumulation and its dopaminergic regulation. (B) Schematic illustration of adjustable synaptic phototransistors with light-induced photogating effect for photocurrent accumulation and gate bias-driven electrostatic gating effect for photocurrent regulation.

To address these challenges, the research team engineered a vision sensor that emulates the dopamine-glutamate signaling pathway found in brain synapses. In the human brain, dopamine modulates glutamate signals to prioritize critical information. Mimicking this process, the newly developed sensor selectively extracts high-contrast visual features, such as object outlines, while filtering out extraneous details.

Professor Moon Kee Choi explained, "By integrating in-sensor computing technology that mimics certain functions of the brain, our system autonomously adjusts brightness and contrast, effectively filtering out irrelevant data." She further added, "This approach fundamentally reduces the data processing burden for robotic vision systems that manage gigabits of visual information per second."

Experimental evaluations showed that the sensor could reduce data transmission volume by approximately 91.8%, while simultaneously improving object recognition accuracy to around 86.7%. The sensor incorporates a phototransistor whose current response varies with gate voltage; the gate voltage plays a role similar to dopamine by modulating the strength of the response. This gate voltage control enables the sensor to adapt dynamically to varying lighting conditions, ensuring clear outline detection even in low-light environments. Furthermore, the sensor's output current responds to brightness differences between objects and backgrounds, amplifying high-contrast edges and suppressing uniform regions.
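The behavior described above can be loosely illustrated in software. The following is a minimal sketch under stated assumptions, not the authors' method or a model of the device physics: the function names, the `gain` parameter (a hypothetical stand-in for gate-voltage control), the `threshold` cutoff, and the toy 8x8 image are all invented for illustration. It keeps only pixels whose brightness differs from a neighbor, and shows how raising the gain can recover outlines in a dim scene.

```python
# Illustrative software analogy only; names and parameters are hypothetical.

def make_square_image(bright, dark, size=8, lo=2, hi=6):
    """Build a size x size image with a bright square on a dark background."""
    return [
        [bright if lo <= r < hi and lo <= c < hi else dark for c in range(size)]
        for r in range(size)
    ]


def extract_contours(image, gain=1.0, threshold=0.2):
    """Keep pixels whose brightness differs from a 4-connected neighbor.

    gain: hypothetical stand-in for the gate-voltage control.
    threshold: hypothetical cutoff; weaker responses are suppressed to 0.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Largest absolute brightness difference to any in-bounds neighbor.
            diffs = [
                abs(image[r][c] - image[nr][nc])
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= nr < rows and 0 <= nc < cols
            ]
            response = gain * max(diffs, default=0.0)
            out[r][c] = response if response >= threshold else 0.0
    return out


def transmitted_fraction(feature_map):
    """Fraction of pixels with a nonzero response (a proxy for data sent on)."""
    total = sum(len(row) for row in feature_map)
    nonzero = sum(1 for row in feature_map for v in row if v > 0.0)
    return nonzero / total


if __name__ == "__main__":
    well_lit = make_square_image(bright=0.9, dark=0.1)
    dim = make_square_image(bright=0.15, dark=0.05)
    print("well lit, gain 1:", transmitted_fraction(extract_contours(well_lit)))
    print("dim, gain 1:", transmitted_fraction(extract_contours(dim, gain=1.0)))
    print("dim, gain 4:", transmitted_fraction(extract_contours(dim, gain=4.0)))
```

In this toy setup, uniform regions (the inside of the square and the far background) produce no response, so only the outline is passed on. The fraction of data removed here depends entirely on the toy image and is not comparable to the 91.8% figure reported for the actual sensor.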

Dr. Changsoon Choi from KIST commented, "This technology has broad applicability across various vision-based systems, including robotics, autonomous vehicles, drones, and IoT devices. By simultaneously enhancing data processing speed and energy efficiency, it holds significant potential as a cornerstone for next-generation AI vision solutions."

This research was supported by the Young Researcher Program of the National Research Foundation of Korea, the Future Resource Research Program of KIST, and the Institute for Basic Science (IBS). The findings were published online in Science Advances on May 2, 2025.

Journal Reference

Jong Ik Kwon, Ji Su Kim, Hyo Jin Seung, et al., "In-sensor multilevel image adjustment for high-clarity contour extraction using adjustable synaptic phototransistors," Sci. Adv. (2025).