Researchers at the Institute of Science Tokyo have developed a groundbreaking neural-network-based 3D imaging technique that can precisely measure moving objects, a task long considered extremely challenging for conventional optical systems. Presented at the IEEE/CVF International Conference on Computer Vision (ICCV) 2025, the new neural inverse rendering method reconstructs high-resolution 3D shapes using only three projection patterns, enabling dynamic 3D measurement for applications such as manufacturing inspection, digital twin modeling, and performance capture in visual production.

Neural Inverse Rendering for Accurate, High-Precision 3D Measurements of Moving Objects

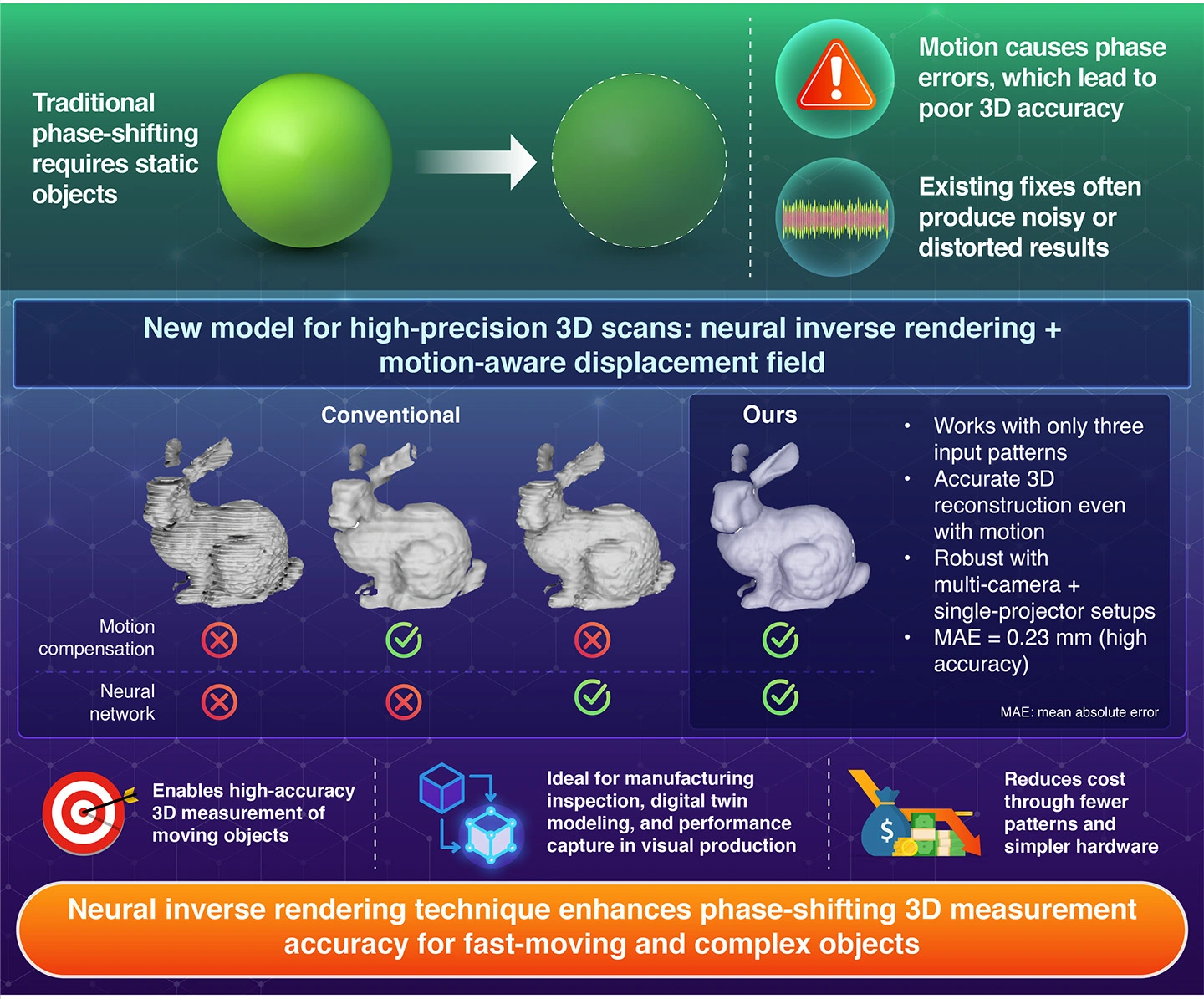

Among optical approaches, structured light methods, which project patterns onto an object and analyze the reflected light to reconstruct its 3D shape, are widely used for their precision and versatility. However, the widely adopted phase-shifting method struggles when the object moves even slightly during measurement. Such motion misaligns the projected and captured patterns and distorts the reconstruction, which limits conventional 3D imaging systems in applications ranging from manufacturing inspection and cultural heritage archiving to digital twin modeling and performance capture in visual production.
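For readers who want the arithmetic, the textbook three-step form of phase shifting is written out below. The shift convention (−2π/3, 0, +2π/3) is a common choice assumed here; the release does not state which convention the authors use. Each pixel has three unknowns (background intensity A, fringe modulation B, and phase φ), so three images are the minimum, and the algebra only holds if all three images observe the same surface point at that pixel.

```latex
\begin{aligned}
I_k(\mathbf{x}) &= A(\mathbf{x}) + B(\mathbf{x})\cos\!\bigl(\phi(\mathbf{x}) + \delta_k\bigr),
  \qquad (\delta_1, \delta_2, \delta_3) = \left(-\tfrac{2\pi}{3},\ 0,\ \tfrac{2\pi}{3}\right) \\
I_1 - I_3 &= \sqrt{3}\,B\sin\phi, \qquad 2I_2 - I_1 - I_3 = 3B\cos\phi \\
\phi(\mathbf{x}) &= \operatorname{atan2}\!\bigl(\sqrt{3}\,(I_1 - I_3),\ 2I_2 - I_1 - I_3\bigr)
\end{aligned}
```

If the object moves between the three exposures, the three values at a pixel no longer share a single φ, and the formula returns a biased phase.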

To address this issue, researchers have sought new ways to overcome the motion-induced errors that degrade optical 3D measurements. When the phase-shifting method projects multiple sinusoidal patterns sequentially, even slight object motion disrupts the alignment between patterns, leading to blurred or inaccurate 3D reconstructions. This motion sensitivity has therefore become a key bottleneck for high-precision 3D imaging in dynamic environments.
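The motion sensitivity described above can be reproduced numerically. The sketch below is a toy 1D simulation, not the authors' code: it assumes the −2π/3, 0, +2π/3 shift convention, uses a made-up phase map (`surface_phase`) to stand in for an object, and makes the simplifying assumption that the phase seen by the camera simply translates with the object between exposures. With no motion, the standard three-step formula recovers the phase to rounding error; a shift of 1% of the row per frame produces a clearly visible error.

```python
import numpy as np

# Assumed convention: phase shifts of -2*pi/3, 0 and +2*pi/3 for the three patterns.
SHIFTS = (-2 * np.pi / 3, 0.0, 2 * np.pi / 3)


def capture(phase_fn, x, shift_per_frame, a=0.5, b=0.4):
    """Simulate three captured intensity rows.

    Toy assumption: the phase seen by the camera translates with the object,
    so frame k sees phase_fn(x - (k - 1) * shift_per_frame); frame 1 is the reference.
    """
    return [a + b * np.cos(phase_fn(x - (k - 1) * shift_per_frame) + d)
            for k, d in enumerate(SHIFTS)]


def three_step_phase(i1, i2, i3):
    """Standard three-step wrapped phase for shifts -2pi/3, 0, +2pi/3."""
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)


def max_phase_error(estimate, truth):
    """Largest absolute phase difference after wrapping to (-pi, pi]."""
    return float(np.max(np.abs(np.angle(np.exp(1j * (estimate - truth))))))


def surface_phase(x):
    # Hypothetical phase map: a carrier ramp plus a smooth bump standing in for shape.
    return 2 * np.pi * 4 * x + 0.8 * np.sin(2 * np.pi * x)


x = np.linspace(0.0, 1.0, 1000, endpoint=False)
truth = surface_phase(x)

static_err = max_phase_error(three_step_phase(*capture(surface_phase, x, 0.0)), truth)
moving_err = max_phase_error(three_step_phase(*capture(surface_phase, x, 0.01)), truth)
print(f"static: {static_err:.2e} rad, moving: {moving_err:.3f} rad")
```

The error under motion is not a constant offset: it varies periodically with the fringe phase, which shows up as ripple on the reconstructed surface rather than a simple shift.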

Recognizing these limitations, a research team led by then-graduate student Yuki Urakawa and Associate Professor Yoshihiro Watanabe of the Department of Information and Communications Engineering, School of Engineering, Institute of Science Tokyo, Japan, developed a new approach for accurately measuring the shapes of moving objects. Their findings were presented on October 23, 2025, at the IEEE/CVF International Conference on Computer Vision (ICCV) 2025, one of the world's leading venues for cutting-edge computer vision research, and appear in the conference proceedings.

"Structured light methods are a well-established approach in optical 3D measurement," Watanabe explains. "However, because the widely used phase-shifting method requires multiple sequential projections, it has a fundamental difficulty handling motion. This challenge motivated us to develop a technique capable of capturing accurate 3D shapes even when objects are moving."

The team proposed a neural-network-based framework that models object motion and 3D geometry simultaneously within inverse rendering, an approach in which unknown scene properties are estimated by adjusting them until images rendered from the model match the captured images. Unlike conventional phase-shifting techniques, which assume a stationary object, this neural inverse rendering approach jointly reconstructs both the motion and the geometry of a moving target, enabling more precise 3D imaging of dynamic scenes.

In their method, the motion of the object is represented as a displacement field, and both the displacement field and the 3D shape are jointly optimized using a neural network. This design makes it possible to correct motion-induced discrepancies between the projected and captured patterns, allowing accurate 3D reconstruction even for moving targets.
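To make the role of the displacement field concrete, the sketch below isolates the correction step in a toy 1D setting. It is not the authors' method: in their approach the displacement field is unknown and is optimized jointly with the shape by a neural network, whereas here a hypothetical field `displacement(x, k)` (a translation plus a slight stretch) is simply given. Each frame is warped back to the reference frame by sampling it at x + u_k(x), after which the ordinary three-step formula works again.

```python
import numpy as np

SHIFTS = (-2 * np.pi / 3, 0.0, 2 * np.pi / 3)  # assumed three-step convention
A, B = 0.5, 0.4  # hypothetical background and modulation intensities
V = 0.01         # hypothetical per-frame motion magnitude (fraction of the row)


def surface_phase(x):
    # Hypothetical phase map standing in for the object's shape.
    return 2 * np.pi * 4 * x + 0.8 * np.sin(2 * np.pi * x)


def displacement(x, k):
    # Hypothetical displacement field u_k(x): the point at x in the reference
    # frame (k = 1) appears at x + u_k(x) in frame k (translation plus slight stretch).
    return (k - 1) * V * (1.0 + 0.5 * x)


def render_frame(p, k):
    # Forward model: pixel p in frame k shows the reference point x with x + u_k(x) = p.
    c = (k - 1) * V
    x_ref = (p - c) / (1.0 + 0.5 * c)
    return A + B * np.cos(surface_phase(x_ref) + SHIFTS[k])


def three_step_phase(i1, i2, i3):
    # Standard three-step wrapped phase for shifts -2pi/3, 0, +2pi/3.
    return np.arctan2(np.sqrt(3.0) * (i1 - i3), 2.0 * i2 - i1 - i3)


def phase_error(estimate, truth, mask):
    # Largest wrapped phase difference inside the mask.
    return float(np.max(np.abs(np.angle(np.exp(1j * (estimate - truth)))[mask])))


p = np.linspace(0.0, 1.0, 2000, endpoint=False)  # camera pixel coordinates
frames = [render_frame(p, k) for k in range(3)]

# Warp each frame back to the reference by sampling frame k at x + u_k(x).
aligned = [np.interp(p + displacement(p, k), p, frames[k]) for k in range(3)]

truth = surface_phase(p)
interior = (p > 0.05) & (p < 0.95)  # skip borders where the warp leaves the image
naive_err = phase_error(three_step_phase(*frames), truth, interior)
compensated_err = phase_error(three_step_phase(*aligned), truth, interior)
print(f"naive: {naive_err:.3f} rad, compensated: {compensated_err:.1e} rad")
```

The remaining error after compensation comes only from linear interpolation. The hard part, which this sketch deliberately skips, is estimating u_k and the shape together from the same three images.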

To demonstrate the method's effectiveness, the researchers implemented their system in a multi-view setup using a single projector and two cameras. Remarkably, they achieved high-resolution, high-accuracy 3D measurements using only three standard sinusoidal projection patterns, the same number typically required for phase-shifting measurements of stationary objects.
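The release does not detail how the projector and the two cameras are combined. One common way such rigs use fringe phase is as a correspondence cue between cameras, followed by ordinary stereo triangulation; the sketch below illustrates that idea for a single rectified image row. Everything in it is an assumption: the focal length, baseline, fringe period, and flat target are hypothetical, and the phase is taken to be already unwrapped, which three-pattern phase shifting alone does not provide. It should not be read as the authors' pipeline, whose reconstruction is driven by neural inverse rendering.

```python
import numpy as np

FOCAL_PX = 1000.0     # hypothetical focal length in pixels (both cameras)
BASELINE = 0.1        # hypothetical camera baseline in metres
PLANE_DEPTH = 0.8     # hypothetical depth of a flat test target in metres
K = 2 * np.pi / 0.01  # hypothetical projector phase per metre of world X (1 cm fringes)


def phase_row(x_px, camera_offset):
    # Unwrapped projector phase seen along one rectified row by a camera whose
    # centre sits at world X = camera_offset, looking at a fronto-parallel plane.
    world_x = x_px * PLANE_DEPTH / FOCAL_PX + camera_offset
    return K * world_x


def match_by_phase(phase_left, phase_right, x_right):
    # Sub-pixel right-camera column with the same phase as each left pixel;
    # np.interp requires phase_right to increase monotonically along the row.
    return np.interp(phase_left, phase_right, x_right)


def depth_from_disparity(x_left, x_right):
    # Rectified stereo triangulation: Z = f * b / (x_left - x_right).
    return FOCAL_PX * BASELINE / (x_left - x_right)


x_right = np.arange(-300.0, 301.0)  # right-camera pixel columns
x_left = np.arange(-150.0, 301.0)   # left pixels whose match stays in view
phase_left = phase_row(x_left, 0.0)
phase_right = phase_row(x_right, BASELINE)

matched = match_by_phase(phase_left, phase_right, x_right)
depth = depth_from_disparity(x_left, matched)
print(depth.min(), depth.max())  # both should be ~0.8
```

Because phase varies continuously across the surface, matching on phase gives sub-pixel correspondences without searching for image texture, which is one reason fringe projection pairs naturally with stereo cameras.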

As Watanabe reports, "Using only three standard sinusoidal projection patterns (the same type employed in conventional phase-shifting methods), we successfully achieved high-precision, high-resolution 3D shape reconstruction of moving objects."

This advancement marks a significant step forward in the field of optical metrology, enabling dynamic and accurate 3D capture with fewer projection patterns. By maintaining high precision while reducing the number of required projections, the method expands the possibilities for 3D measurement in scenarios involving motion or deformation.

According to Watanabe, "This breakthrough technology enables dynamic, high-accuracy 3D measurement with minimal projection patterns, opening up new possibilities for applications in manufacturing inspection, digital twin modeling, and performance capture in visual production."

By merging deep learning with established principles of structured light, the research team at Science Tokyo has effectively extended the reach of optical measurement to dynamic environments. Their neural inverse rendering technique provides a foundation for next-generation 3D sensing technologies capable of operating in fast-moving or unstable settings without compromising measurement precision.

This achievement underscores Science Tokyo's ongoing leadership at the intersection of AI, imaging, and engineering, and highlights the growing role of neural modeling in solving real-world measurement problems.

For a clearer visual understanding of the results, watch the demonstration video on YouTube.

Reference

- Authors: Yuki Urakawa and Yoshihiro Watanabe*
- Title: Neural Inverse Rendering for High-Accuracy 3D Measurement of Moving Objects with Fewer Phase-Shifting Patterns
- Conference: IEEE/CVF International Conference on Computer Vision (ICCV) 2025
- Affiliations: Department of Information and Communications Engineering, Institute of Science Tokyo, Japan