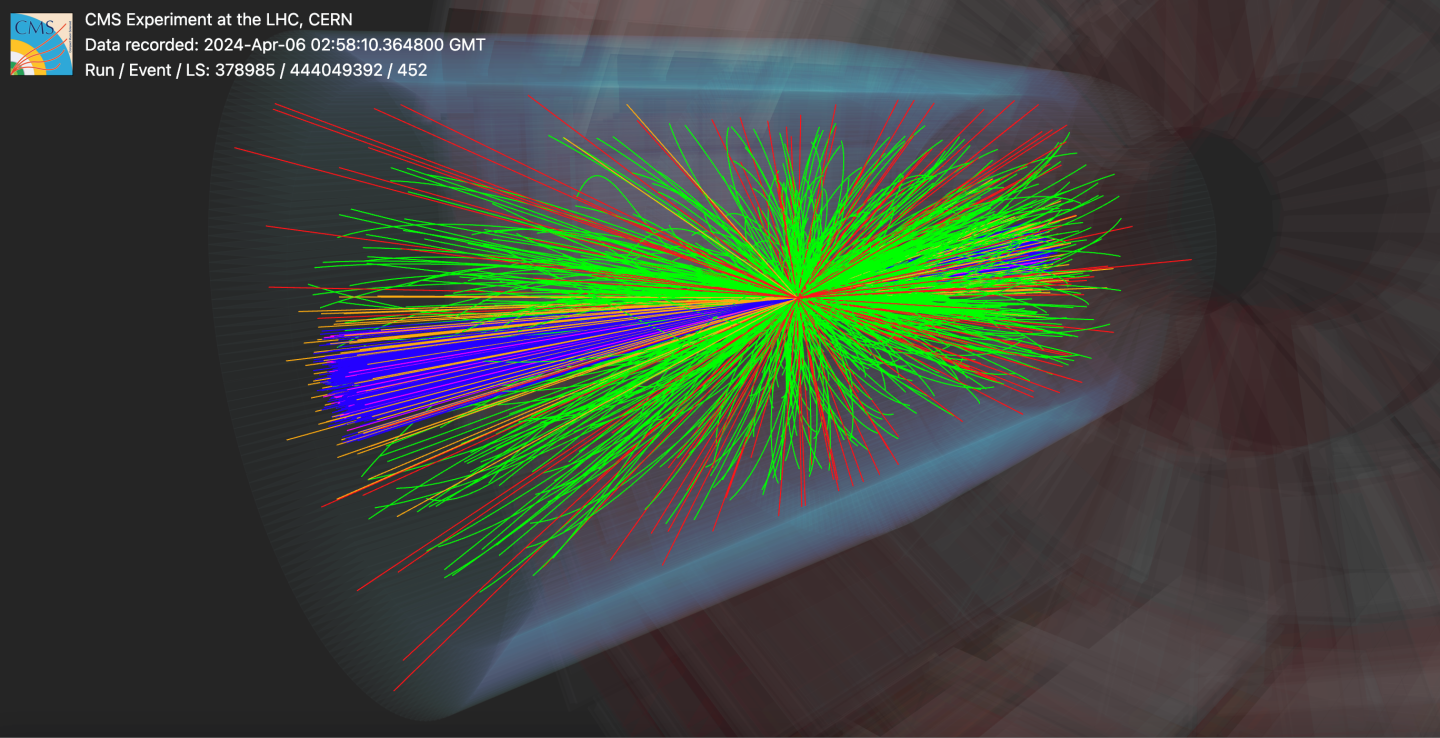

A particle collision reconstructed using the new CMS machine-learning-based particle-flow (MLPF) algorithm. The HFEM and HFHAD signals come from the forward calorimeters, which measure energy from particles travelling close to the beamline. (Image: CMS)

The CMS Collaboration has shown, for the first time, that machine learning can be used to fully reconstruct particle collisions at the LHC. This new approach can reconstruct collisions more quickly and precisely than traditional methods, helping physicists better understand LHC data.

Each proton-proton collision at the LHC sprays out a complex pattern of particles that must be carefully reconstructed to allow physicists to study what really happened. For more than a decade, CMS has used a particle-flow (PF) algorithm, which combines information from the experiment's different detectors, to identify each particle produced in a collision. Although this method works remarkably well, it relies on a long chain of hand-crafted rules designed by physicists.

The new CMS machine-learning-based particle-flow (MLPF) algorithm approaches the task in a fundamentally different way, replacing much of the rigid hand-crafted logic with a single model trained directly on simulated collisions. Instead of being told how to reconstruct particles, the algorithm learns how particles look in the detectors, much as humans learn to recognise faces without memorising explicit rules.
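To make the contrast concrete, here is a deliberately tiny sketch of the two approaches. It is not the MLPF algorithm or any CMS code: the two features (`has_track`, `ecal_fraction`), the thresholds, the training numbers and the nearest-centroid classifier are all invented for illustration. The real model is far larger and works on full detector information.

```python
from collections import defaultdict
from math import dist

# Toy "simulated" training examples: (has_track, ecal_fraction) -> true particle type.
# All numbers are invented for illustration; they are not CMS data.
SIMULATED = [
    ((1.0, 0.95), "electron"),
    ((1.0, 0.90), "electron"),
    ((1.0, 0.20), "charged_hadron"),
    ((1.0, 0.30), "charged_hadron"),
    ((0.0, 0.97), "photon"),
    ((0.0, 0.92), "photon"),
    ((0.0, 0.15), "neutral_hadron"),
    ((0.0, 0.25), "neutral_hadron"),
]


def rule_based(features):
    """Hand-crafted logic: the thresholds are chosen by a person."""
    has_track, ecal_fraction = features
    if has_track >= 0.5:
        return "electron" if ecal_fraction > 0.8 else "charged_hadron"
    return "photon" if ecal_fraction > 0.8 else "neutral_hadron"


def train_centroids(examples):
    """Learn one average feature vector (centroid) per particle type."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def learned(features, centroids):
    """Predict the particle type whose learned centroid is closest."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


centroids = train_centroids(SIMULATED)
candidate = (1.0, 0.88)  # a hypothetical detector signature
print(rule_based(candidate), learned(candidate, centroids))
```

In the rule-based function, a person wrote the decision boundaries. In the learned version, the boundaries come from the labelled simulated examples, so improving the result means improving the training sample or the model rather than rewriting rules.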

When benchmarked using data mimicking that from the current LHC run, the performance of the new machine-learning algorithm matched that of the traditional algorithm and, in some cases, even exceeded it. For example, when tested on simulated events in which top quarks were created, the algorithm improved the precision with which sprays of particles, known as jets, were reconstructed by 10-20% in key particle momentum ranges.

The new algorithm also allows a collision to be fully reconstructed far more quickly than before, because it can run efficiently on modern electronic chips known as graphics processing units (GPUs). Traditional algorithms typically need to run on central processing units (CPUs), which are often slower than GPUs for such tasks.

"New uses of machine learning could make data reconstruction more accurate and directly benefit CMS measurements, from precision tests of the Standard Model to searches for new particles," says Joosep Pata, lead developer of the new MLPF algorithm. "Ultimately, our goal is to get the most information out of the experimental data as efficiently as possible."

While the new algorithm was tested under current LHC data conditions, it is predicted to be even more useful for data from the High-Luminosity LHC. Due to start running in 2030, the LHC upgrade will deliver approximately five times more particle collisions, posing a significant challenge to the LHC experiments. By teaching detectors to learn directly from data, physicists are not just improving performance; they are redefining what is possible in experimental particle physics.