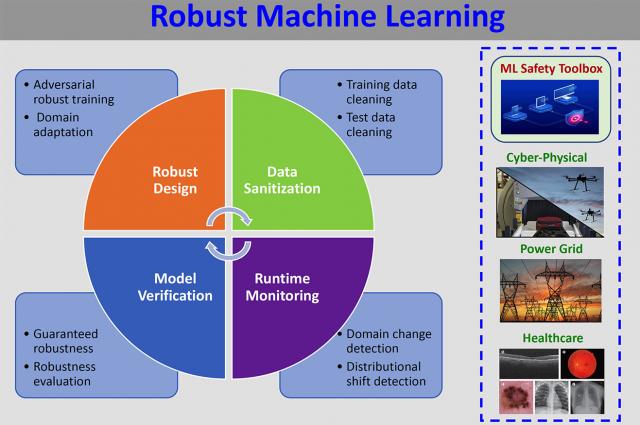

Themost prestigious machine learning conference in the world, The Conference on Neural Information Processing Systems (NeurIPS), is featuring two papers advancing the reliability of deep learning for mission-critical applications at Lawrence Livermore National Laboratory. Shown is a robust machine learning life cycle. Image courtesy of Bhavya Kailkhura

The 34th Conference on Neural Information Processing Systems (NeurIPS) is featuring two papers advancing the reliability of deep learning for mission-critical applications at Lawrence Livermore National Laboratory (LLNL). The most prestigious machine learning conference in the world, NeurIPS began virtually on Dec. 6.

The first paper describes a framework for understanding the effect of properties of training data on the generalization gap of machine learning (ML) algorithms - the difference between a model's observed performance during training versus its "ground-truth" performance in the real world. Borrowing ideas from statistical mechanics, an LLNL team linked the performance gap to the power spectrum of the training sample design. They corroborated the findings on experiments with deep neural networks (DNNs) on synthetic functions and a complex simulator for inertial confinement fusion, finding that sample designs with optimized spectral properties could provide greater scientific insight using less resources.

"The most important question in applying ML to emerging scientific applications is identifying which training data points you should be collecting," said lead author and LLNL computer scientist Bhavya Kailkhura, who began the work as a summer student intern at the Lab. "This paper basically answers the question of what simulations and experiments we should be running so we can create a DNN that generalizes well to any future data we might encounter. Agnostic of the specific scientific application, these optimized sample designs can help in obtaining deeper scientific insights than previously possible under a given sample (or compute) budget."

In the paper, the team showed that sample designs such as blue noise and Poisson disk sampling could outperform popular uniform designs with respect to the generalization gap. In addition to fundamentally expanding understanding of scientific machine learning, the framework provides precise theoretical insight into DNN performance, Kailkhura said.

"This is probably the first paper that proposes a theory of how training data affects the generalization performance of your machine learning model - currently no other framework exists that can answer this question," Kailkhura said. "To achieve this, we needed to come up with completely new ways of thinking about this problem."

Researchers said the framework could have a major impact on a wide range of applications, including stockpile stewardship, engineering design and optimization or any other scientific application that requires simulation, particularly those that need "ensembling," where scientists must select many different, potentially uncertain data points and run the risk of the output not being generally applicable.

"We want to explore the behavior of the simulation through a range of parameters, not just one, and we want to know how that thing is going to react for all of them," said co-investigator Timo Bremer. "[Scientists] want to run as few simulations as possible to get the best understanding of what outcomes to expect. This paper gives you the optimal way of figuring out which simulations you need to run to get the maximum insight. We can prove that those simulations statistically will give you the most information for your particular problem."

For the ICF simulator experiments, the team noticed that certain variables of interest required enormous amounts of data in order to use the ML functions. By optimizing their experiments using the framework, scientists would reduce errors while using 50 percent fewer simulations or experiments to collect the training data, the team reported.

Researchers said they expect the framework will play an important role in several ML problems and will be helpful in determining what kinds of scientific problems can't be solved with neural networks, where the sample size of data needed is too large to be practical.

"There comes a point where you might as well pick randomly and hope you get lucky, but don't expect any guarantees or generalization because you don't have enough resources," Bremer said. "Essentially what we are showing here is the order of magnitude of experiments or simulations you would need in order for you to make any kind of claim."

Researchers said they are analyzing the generalization gap in other project areas such as material sciences and will look to higher quality sample designs than currently possible.

A Laboratory Directed Research and Development (LDRD) project led by LLNL computer scientist and co-author Jayaraman Thiagarajan funded the work. Other co-authors included LLNL computer scientist Jize Zhang, Qunwei Li of Ant Financial and Yi Zhou of the University of Utah.

Guaranteeing robustness in deep learning neural networks

For the second NeurIPS paper, a team including LLNL's Kailkhura and co-authors at Northeastern University, China's Tsinghua University and the University of California, Los Angeles developed an automatic framework to obtain robustness guarantees of any deep neural network structure using Linear Relaxation-based Perturbation Analysis (LiRPA).

LiRPA has become a go-to element for the robustness verification of deep neural networks, which have historically been susceptible to small perturbations or changes in inputs. However, the approach has been prevented from widespread usage in the general machine learning community and industry by the lack of a sweeping, easy-to-use tool.

Kailkhura called the new framework a "much-needed first step" for Lab mission-critical and "high regret" applications, such as stockpile stewardship, health diagnostics or collaborative autonomy.

"The goal is to understand how robust these deep neural networks are and come up with useful tools that are so easy that a non-expert can use it," Kailkhura explained. "We have applications in the Lab where DNNs are trying to solve high-regret problems, and incorrect decisions could endanger safety or lead to a loss in resources. In this paper we ask, 'How can we make these neural networks provably more robust? And can we guarantee that a DNN will not give a wrong prediction under a certain condition?' These are desirable features to have, but answering these questions is incredibly hard given the complexity of deep neural networks."

In the past, LiRPA-based methods have only considered simple networks, requiring dozens of pages of mathematical proofs for a single architecture, Kailkhura explained. However, at LLNL, where machine learning problems are incredibly complex and employ numerous types of neural network architectures, such labor and time is not feasible.

Researchers said the new framework simplifies and generalizes LiRPA-based algorithms to their most basic form and enables loss fusion, significantly reducing the computational complexity and outperforming previous works on large datasets. The team was able to demonstrate LiRPA-based certified defense on Tiny ImageNet and Downscaled ImageNet, where previous approaches have not been able to scale. The framework's flexibility, differentiability and ease of use also allowed the team to achieve state-of-the-art results on complicated networks like DenseNet, ResNeXt and Transformer, they reported.

"This is about coming up with computational tools that are generic enough so that any neural network architecture you come up with in the future, they would still be applicable," Kailkhura said. "The only thing you need is a neural network represented as a compute graph, and with just a couple of lines of code you can find out how robust it would be. And while training the DNN, you can preemptively take these guarantees into account and can design your DNN to be certifiably robust."

To help broaden adoption in the general machine learning community, the team has made the tool available on the open source repository.

Funded by an LDRD project led by Kailkhura, the project completed its first year and is continuing into the next two years by exploring more complex applications and looking at much larger perturbations encountered in practice. The work is motivated by Lab projects such as collaborative autonomy, where scientists are investigating the use of AI and DNNs with swarms of drones so they can communicate and fly with zero or minimal human assistance, but with safety guarantees to prevent them from colliding with each other or their operators.

Taken together, the two NeurIPS papers are indicative of LLNL's overall strategy in making AI and deep learning trustworthy enough to be used confidently with mission-critical applications and answer fundamental questions that can't be answered with other approaches, Bremer said.

"The key for Livermore is that these guarantees are going to be crucial if we ever want to move DNNs into our applications," Bremer said. "Everybody wants to use ML because it has so many advantages, but without solving these problems, it's difficult to see how you could deploy these systems in situations where it actually matters. Suddenly, this need for fundamental research has become much greater in AI because it's so fast-moving. These papers are the first of many things the Lab is doing to get there."