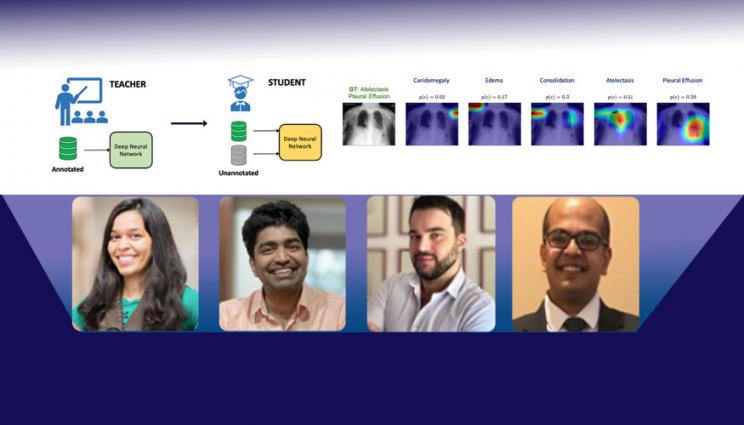

Lawrence Livermore National Laboratory computer scientist Jay Thiagarajan (second from left) and colleagues from IBM Research have developed a "self-training" deep learning approach that addresses common challenges in the adoption of artificial intelligence for disease diagnosis. The team won a Best Paper award for Computer-Aided Diagnosis for the work at the recent SPIE Medical Imaging Conference.

New work by computer scientists at Lawrence Livermore National Laboratory (LLNL) and IBM Research on deep learning models to accurately diagnose diseases from X-ray images with less labeled data won the Best Paper award for Computer-Aided Diagnosis at the SPIE Medical Imaging Conference on Feb. 19.

The technique, which includes novel regularization and "self-training" strategies, addresses some well-known challenges in the adoption of artificial intelligence (AI) for disease diagnosis, namely the difficulty in obtaining abundant labeled data due to cost, effort or privacy issues and the inherent sampling biases in the collected data, researchers said. AI algorithms also are not currently able to effectively diagnose conditions that are not sufficiently represented in the training data.

LLNL computer scientist Jay Thiagarajan said the team's approach demonstrates that accurate models can be created with limited labeled data and perform as well or even better than neural networks trained on much larger labeled datasets. The paper, published by SPIE, included co-authors at IBM Research Almaden in San Jose.

"Building predictive models rapidly is becoming more important in health care," Thiagarajan explained. "There is a fundamental problem we're trying to address. Data comes from different hospitals and it's difficult to label - experts don't have the time to collect and annotate it all. It's often posed as a multi-label classification problem, where we are looking at the presence of multiple diseases in one shot. We can't wait to have enough data for every combination of disease conditions, so we built a new technique that tries to compensate for this lack of data using regularization strategies that can make deep learning models much more efficient, even with limited data."

In the paper, the team describes a framework that utilizes strategies including data augmentation, confidence tempering and self-training, where an initial "teacher" model learns exclusively using labeled imaging data, and then trains a second-generation "student" model using both labeled data and additional unlabeled data, based on guidance from the teacher. This second-generation model performs better than the teacher model, Thiagarajan explained, because it sees more data, and the teacher is able to provide pseudo-supervision. However, such an approach can be prone to confirmation bias (i.e. incorrect guidance by the teacher), which is addressed by confidence tempering and data augmentation strategies.

The team applied their learning approach to benchmark datasets of chest X-rays containing both labeled and unlabeled data to diagnose five different heart conditions: cardiomegaly, edema, consolidation, atelectasis and pleural effusion. The researchers saw a reduction of 85 percent in the amount of labeled data required to achieve the same performance as the existing state-of-the-art in neural networks trained on the entire labeled dataset. That's important in the clinical application of AI where collecting labeled data can be extremely challenging, Thiagarajan said.

"When you have limited data, improving the capability of models to handle data it hasn't seen before is the key aspect we have to consider when solving limited data problems," he explained. "It's not about picking Model X versus Model Y, it's about fundamentally changing the way we train these models, and there's a lot more work that needs to be done in this space for us to achieve meaningful diagnosis models for real-world use cases in health care."

Thiagarajan cautioned that while the technique is broadly applicable, the findings won't necessarily apply to every medical classification or segmentation problem. However, he added, it is a "promising first step" to democratizing AI models - creating models capable of applying to a broad range of disease conditions, between common and rare types. Ultimately, an effective model would need to be trained on limited labeled data, generalize to a wide range of conditions and support simultaneous prediction of multiple diseases, Thiagarajan added.

Thiagarajan said the team's next steps are to use domain knowledge to improve the proposed framework and address class imbalances by further exploration of data augmentation and exposing the model to more variations, thus enabling them to be more broadly applicable.

This work was carried out in a project funded by the Department of Energy's Advanced Scientific Computing Research program.

Co-authors included Deepta Rajan, Alexandros Karargyris and Satyananda Kashyap of IBM Research.