Rendering an object invisible with a cloak has long been a cherished dream of humanity, and over the past decade it has attracted immense attention thanks to the advent of metamaterials. On March 24, the journal Nature Photonics published the latest research by the team led by Prof. CHEN Hongsheng of the College of Information Science and Electronic Engineering, Zhejiang University. The team proposed the concept of a self-adaptive cloak that is driven by deep learning and operates without human intervention.

In recent years, research into invisibility has boomed thanks to spectacular advances in transformation optics and synthetic electromagnetic (EM) materials. A cloak based on transformation optics achieves invisibility by precisely designing its constitutive parameters so that light is guided around the hidden object. However, experimentally realizing bulk materials that are both anisotropic and inhomogeneous remains a formidable challenge.

An ideal invisibility cloak should rapidly and automatically adjust its internal structure (its active components) to remain invisible at all times, even when exposed to an external stimulus or a non-stationary environment, as if it were endowed with a chameleon's abilities. This highly desirable but hard-to-attain property, intelligence or self-adaptability, must be developed before cloaks can serve a wide range of real-time applications involving moving objects and in situ environments.

"The octopus, an amazing master of disguise, achieves its quick-change effect thanks to the unique chromatophores, iridophores and leucophores in its skin. Similarly, we can incorporate tunable metamaterials, deep learning and EM waves into the design of our cloak," said QIAN Chao, lead author of the study.

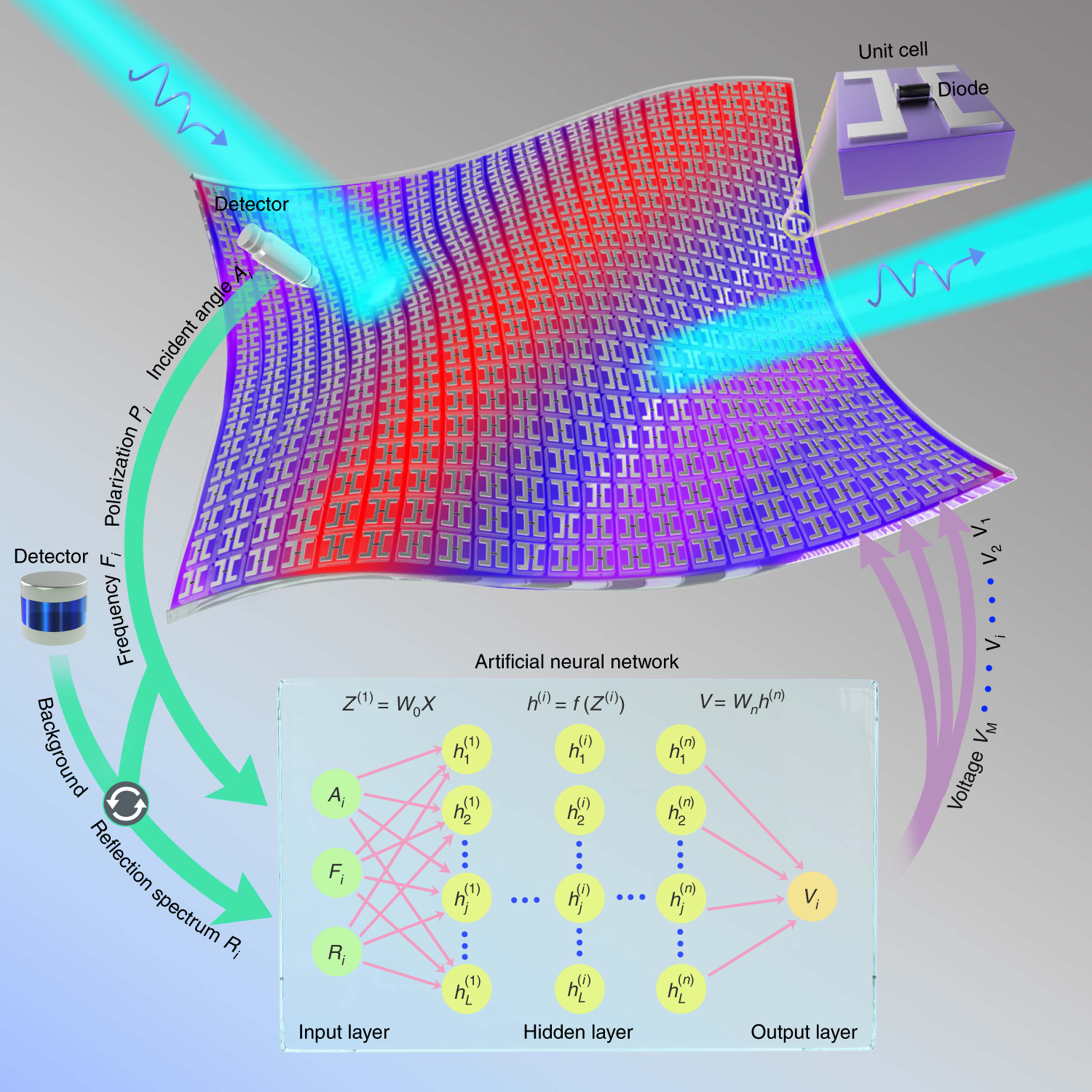

However, building a complete intelligent invisibility system from scratch is easier said than done. After three years of persistent effort, the research team developed an intelligent invisibility cloak that combines deep learning with a tunable metasurface. At microwave frequencies, the reflection property of each element of the metasurface can be tuned independently by applying different direct-current (DC) bias voltages across the varactor diode loaded in that element. Embedded with a pre-trained artificial neural network (ANN), a fundamental building block of deep learning, the metasurface cloak can respond on a millisecond timescale to changes in the incident wave and the surrounding background, without any human intervention: all bias voltages are calculated automatically and supplied to the cloak instantly. The researchers used a finite-difference time-domain (FDTD) program together with the pre-trained ANN to simulate a real-world scene, and then benchmarked the performance in a proof-of-concept experiment.
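The core idea, a pre-trained network that turns sensed conditions into one bias voltage per metasurface element, can be illustrated with a minimal sketch. This is not the team's network. It assumes a tiny two-layer fully connected network, a hypothetical 0 to 20 V bias range, four hypothetical input features describing the incident wave and background, and eight metasurface elements, with seeded random weights standing in for a trained model.

```python
import math
import random

# Hypothetical DC bias range for the varactor diodes (an assumption, not from the study).
V_MIN, V_MAX = 0.0, 20.0


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ x + b), computed row by row."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def init_layer(n_in, n_out, rng):
    """Random placeholder weights standing in for a pre-trained network (hypothetical)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
    return weights, biases


def predict_bias_voltages(features, layers):
    """Map sensed features to one DC bias voltage per metasurface element."""
    (w1, b1), (w2, b2) = layers
    hidden = dense(features, w1, b1, relu)
    # The sigmoid keeps each output in (0, 1); rescale it to the allowed voltage range.
    unit = dense(hidden, w2, b2, sigmoid)
    return [V_MIN + u * (V_MAX - V_MIN) for u in unit]


rng = random.Random(0)
n_features, n_hidden, n_elements = 4, 16, 8
layers = [
    init_layer(n_features, n_hidden, rng),
    init_layer(n_hidden, n_elements, rng),
]

# Hypothetical sensed features, e.g. frequency, incidence angle and two background descriptors.
features = [0.5, 0.3, 0.8, 0.1]
voltages = predict_bias_voltages(features, layers)
print([round(v, 2) for v in voltages])
```

In a real system the features would come from sensors and the weights from offline training; bounding the output layer with a sigmoid is one simple way to keep every predicted voltage inside the diodes' allowed range.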

Schematic of a deep-learning-enabled self-adaptive metasurface cloak

What is most striking about the team's chameleon-shaped plastic model is its superb cloaking capability. It can offset the external illumination and blend into the ambient environment in less than 15 milliseconds, whereas a chameleon typically takes about 6 seconds to camouflage itself.

This ground-breaking study brings existing cloaking strategies closer to practical scenarios involving changing backgrounds, moving objects, multi-static detection and beyond, and the approach can be readily scaled up to higher frequencies. Moreover, the proposed concept may pave the way for new metadevices and for other data-driven areas, such as streamlining the on-demand design of nanostructures, solving EM inverse problems and capturing latent physical knowledge. Compared with conventional adaptive optics, optical ANNs built from nanophotonic circuits and metasurfaces could drastically increase computing speed and, ultimately, power efficiency.