As the self-driving car travels down a dark, rural road, a deer lingering among the trees up ahead looks poised to dart into the car's path. Will the vehicle know exactly what to do to keep everyone safe?

Some computer scientists and engineers aren't so sure. But researchers at the University of Virginia School of Engineering and Applied Science are hard at work developing methods they hope will bring greater confidence to the machine-learning world - not only for self-driving cars, but for planes that can land on their own and drones that can deliver your groceries.

At the heart of the problem is the fact that key functions of the software that guides self-driving cars and other machines through their autonomous motions are not written by humans - instead, those functions are the product of machine learning. Machine-learned functions are expressed in a form that makes it essentially impossible for humans to understand the rules and logic they encode, which makes it very difficult to evaluate whether the software is safe and in the best interest of humanity.

Researchers in UVA's Leading Engineering for Safe Software Lab - the LESS Lab, as it's commonly known - are working to develop the methods necessary to provide society with the confidence to trust emerging autonomous systems.

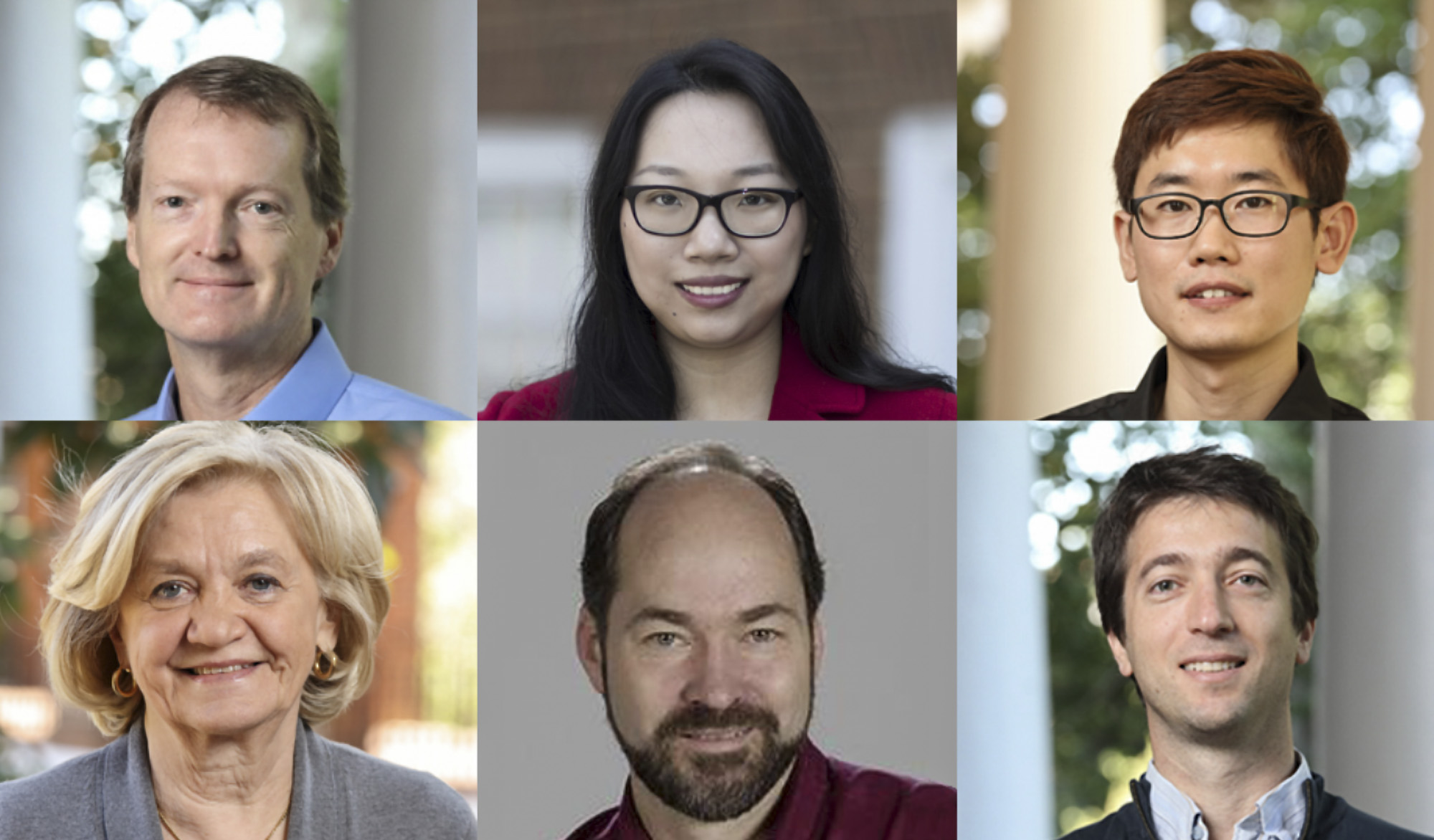

The team's researchers are Matthew B. Dwyer, Robert Thomson Distinguished Professor; Sebastian Elbaum, Anita Jones Faculty Fellow and Professor; Lu Feng, assistant professor; Yonghwi Kwon, John Knight Career Enhancement Assistant Professor; Mary Lou Soffa, Owens R. Cheatham Professor of Sciences; and Kevin Sullivan, associate professor. All hold appointments in the UVA Engineering Department of Computer Science. Feng holds a joint appointment in the Department of Engineering Systems and Environment.

Since its creation in 2018, the LESS Lab has rapidly grown to support more than 20 graduate students, produce more than 50 publications and obtain competitive external awards totaling more than $10 million in research funding. The awards have come from such agencies as the National Science Foundation, the Defense Advanced Research Projects Agency, the Air Force Office of Scientific Research and the Army Research Office.

The lab's growth trajectory matches the scope and urgency of the problem these researchers are trying to solve.

The Machine-Learning Explosion

An inflection point in the rapid rise of machine learning happened just a decade ago when computer vision researchers won the ImageNet Large Scale Visual Recognition Challenge - to identify objects in photos - using a machine-learning solution. Google took notice and quickly moved to capitalize on the use of data-driven algorithms.

Other tech companies followed suit, and public demand for machine-learning applications snowballed. Last year, Forbes estimated that the global machine-learning market grew at a compound annual growth rate of 44% and is on track to become a $21 billion market by 2024.

But as the technology ramped up, computer scientists started sounding the alarm that mathematical methods to validate and verify the software were lagging.

Government agencies like the Defense Advanced Research Projects Agency responded to the concerns. In 2017, DARPA launched the Assured Autonomy program to develop "mathematically verifiable approaches" for assuring an acceptable level of safety with data-driven, machine-learning algorithms.

UVA Engineering's Department of Computer Science also took action, extending its expertise with a strategic cluster of hires in software engineering, programming languages and cyber-physical systems. Experts combined efforts in cross-cutting research collaborations, including the LESS Lab, to focus on solving a global software problem in need of an urgent solution.

A Canary in the Coal Mine

By this time, the self-driving car industry was firmly in the spotlight and the problems with machine learning were becoming more evident. A fatal Uber crash in 2018 was recorded as the first human death from an autonomous vehicle.

"The progress toward practical autonomous driving was really fast and dramatic, shaped like a hockey stick curve, and much faster than the rate of growth for the techniques that can ensure their safety," Elbaum said.

This August, tech blog Engadget reported that one self-driving car company, Waymo, had put in 20 million miles of on-the-road testing. Yet, devastating failures continue to occur; as the software has gotten more and more complex, no amount of real-world testing would be enough to find all the bugs.

And the software failures that have occurred have made many wary of on-the-road testing.

"Even if a simulation works 100 times, would you jump into an autonomous car and let it drive you around? Probably not," Dwyer said. "Probably you want some significant on-the-street testing to further increase your confidence. And at the same time, you don't want to be the one in the car when that test goes on."

And then there is the need to anticipate - and test - every single obstacle that might come up in the 4-million-mile public roads network.

"Think about the complexities of a particular scenario, like driving on the highway with hauler trucks on either side so that an autonomous vehicle needs to navigate crosswinds at high speeds and around curves," Dwyer said. "Getting a physical setup that matches that challenging scenario would be difficult to do in real-world testing. But in simulation that can get that much easier."

And that's one area where LESS Lab researchers are making real headway. They are developing sophisticated virtual reality simulations that can accurately recreate difficult scenarios that might otherwise be impossible to test on the road.

"There is a huge gap between testing in the real world versus testing in simulation," Elbaum said. "We want to close that gap and create methods in which we can expose autonomous systems to the many complex scenarios they will face. This will substantially reduce testing time and cost, and it is a safer way to test."

Simulations accurate enough to stand in for real-world driving would be the equivalent of rocket fuel for the huge amount of sophisticated testing required, cutting both the time it takes and the human cost. But that is just half of the solution.

The other half is developing mathematical guarantees that can prove the software will do what it is supposed to do all the time. And that will require a whole new set of mathematical frameworks.

Out With the Old, in With the New

Before machine learning, engineers would write explicit, step-by-step instructions for the computer to follow. The logic was deterministic and absolute, so humans could use existing formal mathematical rules to test the code and guarantee it worked.

"So if you really wanted a property that the autonomous system only makes sharp turns when there is an obstacle in front of it, before machine learning, mechanical engineers would say, 'I want this to be true,' and software engineers would build that rule into the software," Dwyer said.
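As a rough illustration of the hand-written approach Dwyer describes, the sketch below builds the sharp-turn rule directly into a small steering function and then checks it over a grid of inputs. Everything in it is an assumption made for illustration: the names `Observation`, `rule_based_steering` and `property_holds`, the 30-degree threshold and the steering values are hypothetical and do not come from the LESS Lab or any real vehicle.

```python
from dataclasses import dataclass
from itertools import product

SHARP_TURN_DEG = 30.0  # hypothetical threshold for what counts as a "sharp" turn


@dataclass(frozen=True)
class Observation:
    obstacle_ahead: bool
    lane_offset_m: float  # positive means the car has drifted to the right


def rule_based_steering(obs: Observation) -> float:
    """Return a steering angle in degrees (negative steers left)."""
    if obs.obstacle_ahead:
        return -45.0  # the only branch that can produce a sharp turn
    # Gentle lane keeping, clamped well below the sharp-turn threshold.
    correction = -10.0 * obs.lane_offset_m
    return max(-15.0, min(15.0, correction))


def property_holds(obs: Observation, angle: float) -> bool:
    """Sharp turns are allowed only when an obstacle is ahead."""
    return abs(angle) < SHARP_TURN_DEG or obs.obstacle_ahead


# Check the property on a small grid: both obstacle states, offsets from -3 m to 3 m.
offsets = [x / 10 for x in range(-30, 31)]
violations = [
    Observation(flag, off)
    for flag, off in product([False, True], offsets)
    if not property_holds(Observation(flag, off), rule_based_steering(Observation(flag, off)))
]
print(len(violations))  # 0
```

Because the rule is written out as an explicit branch and a clamp, a reader can see why the property holds for any input, not just the ones on the grid.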

Today, the computer is fed example images to learn from and continuously improves the probability that an algorithm will produce an expected outcome. The process is no longer based on absolutes or on rules a human can understand.

"With machine learning, you can give an autonomous system examples of sharp turns only with obstacles in front of it," Dwyer said. "But that doesn't mean that the computer will learn and write a program that guarantees that is always true. Previously, humans checked the rules they codified into systems. Now we need a new way to check that a program the machine wrote observes the rules."
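To make the contrast concrete, here is a minimal sketch of checking a machine-written function against the sharp-turn rule from the outside. The function `learned_steering` is a hypothetical stand-in for a trained model, with made-up weights, and `find_violations` is an illustrative random-sampling check, not the LESS Lab's method. The stand-in behaves well on the narrow range of lane offsets it might have been trained on but breaks the rule outside that range.

```python
import random

SHARP_TURN_DEG = 30.0  # hypothetical threshold for what counts as a "sharp" turn


def learned_steering(obstacle_ahead: bool, lane_offset_m: float) -> float:
    """Stand-in for a trained model; the weights are invented for illustration."""
    return -40.0 * float(obstacle_ahead) - 12.0 * lane_offset_m


def find_violations(controller, max_offset=3.0, trials=10_000, seed=0):
    """Sample random inputs and collect cases that break the sharp-turn rule."""
    rng = random.Random(seed)  # fixed seed so the check is repeatable
    bad = []
    for _ in range(trials):
        obstacle = rng.random() < 0.5
        offset = rng.uniform(-max_offset, max_offset)
        angle = controller(obstacle, offset)
        # Rule: a sharp turn is only acceptable when an obstacle is ahead.
        if abs(angle) >= SHARP_TURN_DEG and not obstacle:
            bad.append((obstacle, offset, angle))
    return bad


# Inputs resembling the assumed training range: no violations are found.
in_range = find_violations(learned_steering, max_offset=1.0)

# Wider inputs: the same function makes sharp turns with no obstacle present.
wide = find_violations(learned_steering, max_offset=3.0)
print(len(in_range), len(wide) > 0)
```

Sampling like this can expose counterexamples, but an empty result only means none were found. It is not a proof that the rule always holds, which is why mathematical guarantees are a separate goal.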

Elbaum stresses that there are still a lot of open-ended questions that need to be answered in the quest for concrete, tangible methods. "We are playing catch-up to ensure these systems do what they are supposed to do," he said.

That is why the combined strength of the LESS Lab's faculty and students is critically important for speeding up discovery. Just as important is the LESS Lab's commitment to working collectively and in concert with other UVA experts on machine learning and cyber-physical systems to enable a future where people can trust autonomous systems.

The lab's mission could not be more relevant if we are ever to realize the promise of self-driving cars and ease the fears of a deeply skeptical public.

"If your autonomous car is driving on a sunny day down the road with no other cars, it should work," Dwyer said. "If it's rainy and it's at night and it's crowded and there is a deer on the side of the road, it should also work, and in every other scenario.

"We want to make it work all the time, for everybody, and in every context."