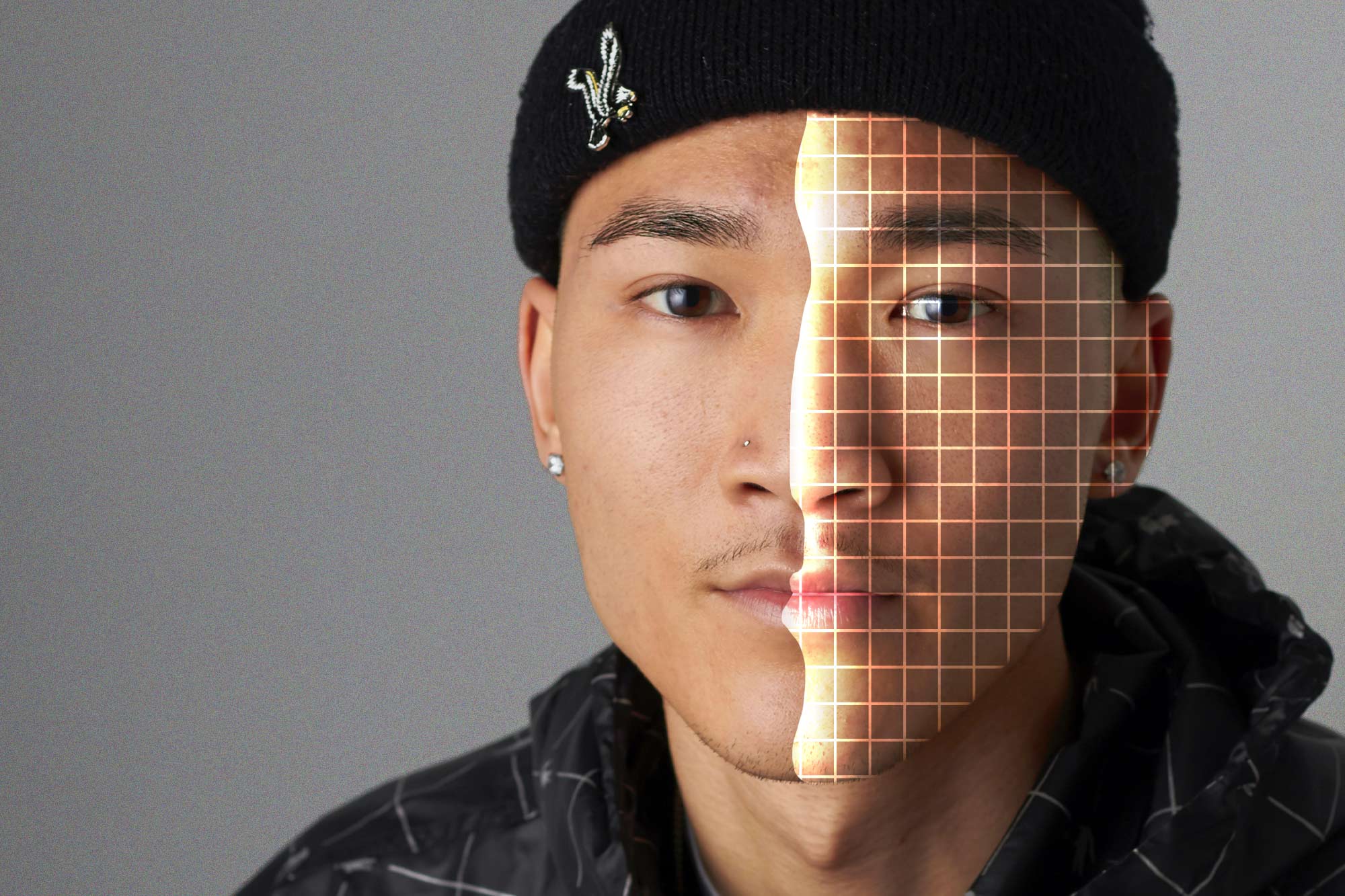

The kind of AI that powers facial recognition often falls short when identifying faces that aren't white. That can mean mistakes in things like criminal justice applications and security software. (Photo illustration by Emily Faith Morgan)

If you were to look out a window right now, your brain would quickly answer a series of questions. Does everything look as it usually does? Is anything out of place? If there are two people walking a dog nearby, your brain would catalogue them too. Do you know them? What do they look like? Do they look friendly?

We make these daily assessments almost without thinking, and often with our biases or assumptions baked in.

UVA data scientist Sheng Li is trying to improve the "deep learning" that AI needs in order to get better. (Contributed photo)

Data scientists like Sheng Li imagine a world where artificial intelligence can make the same connections with the same ease, but without the same bias and potential for error.

Li, an artificial intelligence researcher and assistant professor in the University of Virginia's School of Data Science, directs the Reasoning and Knowledge Discovery Laboratory. There, he and his students investigate how to improve deep learning to make AI not only accurate, but trustworthy.

"Deep learning is a type of artificial intelligence, which learns from a large amount of data and then makes decisions," Li said. "Deep learning techniques have been widely adopted by many applications in our daily life, such as face recognition, virtual assistants and self-driving cars."

AI's mistakes and shortcomings have been well chronicled, and they point to the kinds of integrity gaps Li wants to close. One recent study, for example, found that facial recognition technology misidentifies Black or Asian faces far more often than white faces, and falsely identifies women more often than men. Such errors could have very serious real-world consequences in criminal investigations, or when verification software repeatedly fails to recognize someone.

"We can mitigate the facial recognition bias by incorporating fairness constraints," Li said. "In addition to looking at overall accuracy across the population, we can also look at accuracy for individual groups, defined by sensitive attributes such as age, gender or some racial groups."

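The group-level check Li describes can be illustrated with a short sketch. This is not code from Li's lab; the function names, the toy labels and the groups "A" and "B" are all hypothetical, chosen only to show how a respectable overall accuracy can hide a large gap between groups.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return (overall_accuracy, {group: accuracy}) for paired labels."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must have the same length")
    if not y_true:
        raise ValueError("at least one example is required")

    correct = defaultdict(int)  # correct predictions per group
    total = defaultdict(int)    # examples seen per group
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)

    overall = sum(correct.values()) / len(y_true)
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: 1 = "match", 0 = "no match".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)                  # 0.75
print(per_group)                # {'A': 1.0, 'B': 0.5}
print(accuracy_gap(per_group))  # 0.5
```

In this toy case the model is right 75% of the time overall, yet it is never wrong for group A and wrong half the time for group B. A fairness constraint, in the loose sense used here, would penalize that gap during training instead of optimizing overall accuracy alone.
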
Li first became interested in artificial intelligence when he was selecting university programs in China. There, he earned a bachelor's degree in computer science and a master's degree in information security from Nanjing University of Posts and Telecommunications before pursuing a doctorate in computer engineering at Northeastern University. He joined one of his undergraduate professors in working on image processing and facial recognition.

"We were working on facial recognition and palmprint recognition. We designed mathematical models to extract features from images to recognize the identity of the person," Li said. "Now, when I see the paper, our model seems very simple, but at that time it was very cool to me."

Ultimately, Li came to focus not just on the accuracy of artificial intelligence models, but on their trustworthiness and other attributes such as fairness.

"When we apply machine learning in reality, we must take those things into account as well," he said. "Is it fair? Is it robust? Is it accountable?"

Li and his lab are also focused on another potential minefield: how to teach AI models that "correlation does not imply causation," as the saying goes.

Let's say the dog outside your home started barking. You might quickly realize that the dog is barking at a squirrel. An AI algorithm would not know if the dog was barking at the squirrel, at you, or at another, unseen threat.

"As humans, we can reason through something and think about what the true cause of a phenomenon is," discarding factors that are merely correlations, Li said. "To teach a model a similar thing is very, very challenging."

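A toy simulation can make the barking-dog example concrete. Everything in it is an assumption made for illustration (the probabilities, the variable names, and the idea that a squirrel both sets off the dog and draws a person to the window); it is not a method from Li's group. It shows how barking can look tied to the person at the window until the squirrel is taken into account.

```python
import random


def simulate(n=20000, seed=0):
    """Simulate (squirrel, watching, barking) triples with made-up probabilities."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        squirrel = rng.random() < 0.3
        # The squirrel is the common cause: it draws a person to the
        # window and, independently, makes the dog bark.
        watching = rng.random() < (0.8 if squirrel else 0.2)
        barking = rng.random() < (0.9 if squirrel else 0.1)
        rows.append((squirrel, watching, barking))
    return rows


def bark_rate(rows, watching, squirrel=None):
    """Share of moments with barking, filtered by watching (and squirrel, if given)."""
    selected = [
        b for s, w, b in rows
        if w == watching and (squirrel is None or s == squirrel)
    ]
    return sum(selected) / len(selected)


rows = simulate()

# Naive comparison: barking looks far more common when someone is watching.
naive_gap = bark_rate(rows, True) - bark_rate(rows, False)

# Comparing like with like (squirrel present, or absent) removes the gap.
adjusted_gaps = {
    s: bark_rate(rows, True, s) - bark_rate(rows, False, s)
    for s in (True, False)
}

print(f"naive gap: {naive_gap:.2f}")
print({s: round(g, 2) for s, g in adjusted_gaps.items()})
```

The naive gap is large because watching and barking share a cause, not because one produces the other. Once the data are split by whether a squirrel is present, the gap shrinks to roughly zero. The hard part in practice, as Li notes, is that a model is rarely told which variable plays the role of the squirrel.
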
Statisticians have studied a similar problem for decades, Li said, but machine learning research has turned its attention to causal inference over the last 10 years. Li's group has been studying the problem for about eight years and has produced several publications and tutorials, along with a book that Li co-edited, due out next year.

"We are working on teaching the machine to not only look at and recognize the different objects, but infer pairwise relationships and detect anomalies in a trustworthy way," he said.

It is a challenging goal, but an exciting one as well, if scientists can in fact equip AI to make better decisions.

"Trustworthiness is the major focus of my lab," Li said, because "these algorithms could ultimately help us make better decisions in an open and dynamic environment."