When someone walks into a hospital room, a stethoscope around their neck is a telltale sign that they're a clinician. This medical device has been around for over 200 years and remains a staple in the clinic despite significant advances in medical diagnostics and technologies.

Authors

- Valentina Dargam

Research Assistant Professor of Biomedical Engineering, Florida International University

- Joshua Hutcheson

Associate Professor of Biomedical Engineering, Florida International University

The stethoscope is a medical instrument used to listen to and amplify the internal sounds produced by the body. Physicians still use the sounds they hear through stethoscopes as initial indicators of heart or lung diseases. For example, a heart murmur or crackling in the lungs often signals that a problem is present. Although there have been significant advances in imaging and monitoring technologies, the stethoscope remains a quick, accessible and cost-effective tool for assessing a patient's health.

Though stethoscopes remain useful today, audible symptoms of disease often appear only at later stages of illness. At that point, treatments are less likely to work and outcomes are often poor. This is especially the case for heart disease, where changes in heart sounds are not always clearly defined and may be difficult to hear.

We are scientists and engineers who are exploring ways to use heart sounds to detect disease earlier and more accurately. Our research suggests that combining stethoscopes with artificial intelligence could help doctors be less reliant on the human ear to diagnose heart disease, leading to more timely and effective treatment.

History of the stethoscope

The invention of the stethoscope is widely credited to the 19th-century French physician René Theophile Hyacinthe Laënnec. Before the stethoscope, physicians often placed their ear directly on a patient's chest to listen for abnormalities in breathing and heart sounds.

In 1816, a young girl showing symptoms of heart disease sought consultation with Laënnec. Placing his ear on her chest, however, was considered socially inappropriate. Inspired by children transmitting sounds through a long wooden stick, he instead rolled a sheet of paper to listen to her heart. He was surprised by the sudden clarity of the heart sounds, and the first stethoscope was born.

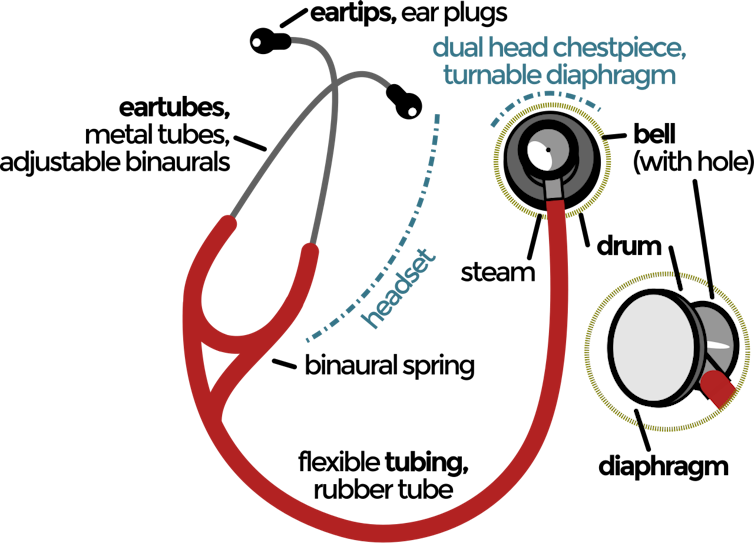

Over the next couple of decades, researchers modified the shape of this early stethoscope to improve its comfort, portability and sound transmission. These changes included the addition of a thin, flat membrane called a diaphragm that vibrates and amplifies sound.

The next major breakthrough occurred in the mid-1850s, when Irish physician Arthur Leared and American physician George Philip Cammann developed stethoscopes that could transmit sounds to both ears. These binaural stethoscopes use two flexible tubes connected to separate earpieces, allowing clearer and more balanced sound by reducing outside noise.

These early models are remarkably similar to the stethoscopes medical doctors use today, with only slight modifications mainly designed for user comfort.

Listening to the heart

Medical schools continue to teach the art of auscultation - the use of sound to assess the function of the heart, lungs and other organs. Digital models of stethoscopes, which have been commercially available since the early 2000s, offer new tools like sound amplification and recording - yet the basic principle that Laënnec introduced endures.

When listening to the heart, doctors pay close attention to the familiar "lub-dub" rhythm of each heartbeat. The first sound - the lub - happens when the valves between the upper and lower chambers of the heart close as it contracts and pushes blood out to the body. The second sound - the dub - occurs when the valves leading out of the heart close as the heart relaxes and refills with blood.

Along with these two normal sounds, doctors also listen for unusual noises - such as murmurs, extra beats or clicks - that can point to problems with how blood is flowing or whether the heart valves are working properly.

Heart sounds can vary greatly depending on the type of heart disease present. Sometimes, different diseases produce the same abnormal sound. For example, a systolic murmur - an extra sound between the first and second heart sounds - may be heard with narrowing of either the aortic or pulmonary valve. Yet the very same murmur can also appear when the heart is structurally normal and healthy. This overlap makes it challenging to diagnose disease based solely on the presence of murmurs.

Teaching AI to hear what people can't

AI technology can identify the hidden differences in the sounds of healthy and damaged hearts and use them to diagnose disease before traditional acoustic changes like murmurs even appear. Instead of relying on the presence of extra or abnormal sounds to diagnose disease, AI can pick up differences in sound that are too faint or subtle for the human ear to detect.

To build these algorithms, researchers record heart sounds using digital stethoscopes. These stethoscopes convert sound into electronic signals that can be amplified, stored and analyzed using computers. Researchers then label each recording as normal or abnormal and use the labeled recordings to train an algorithm to recognize patterns in the sounds. Once trained, the algorithm can use those patterns to predict whether new sounds are normal or abnormal.
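The label-then-train workflow described above can be illustrated with a small Python sketch. Everything in it is an assumption made for illustration and is not the authors' method: the recordings are synthetic tones rather than real heart sounds, the two features (average energy and sample-to-sample roughness) are arbitrary choices, and the nearest-centroid classifier stands in for whatever model a research team might actually use. All function names are hypothetical.

```python
import math
import random

def synth_recording(abnormal, rng, n=400, rate=1000):
    """Synthetic stand-in for a digitized recording (NOT real heart sound data).

    Every signal is a 30 Hz tone plus a little noise; 'abnormal' ones also
    carry a faint 150 Hz component meant to mimic a subtle acoustic change.
    """
    samples = []
    for i in range(n):
        t = i / rate
        x = math.sin(2 * math.pi * 30 * t)
        if abnormal:
            x += 0.3 * math.sin(2 * math.pi * 150 * t)
        samples.append(x + rng.gauss(0, 0.05))
    return samples

def features(samples):
    """Reduce a recording to two numbers: mean energy and mean squared step."""
    energy = sum(x * x for x in samples) / len(samples)
    rough = sum((b - a) ** 2 for a, b in zip(samples, samples[1:])) / (len(samples) - 1)
    return (energy, rough)

def make_dataset(rng, per_class):
    """Build labeled examples, mirroring the manual labeling step."""
    data = []
    for label in ("normal", "abnormal"):
        for _ in range(per_class):
            rec = synth_recording(label == "abnormal", rng)
            data.append((features(rec), label))
    return data

def train_centroids(examples):
    """'Training' here just averages the feature vectors of each label."""
    sums, counts = {}, {}
    for feats, label in examples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, feats):
    """Assign the label whose average feature vector is closest."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feats))

rng = random.Random(0)            # fixed seed so the sketch is repeatable
train = make_dataset(rng, 20)     # labeled recordings used for training
test = make_dataset(rng, 10)      # new recordings the model has not seen
centroids = train_centroids(train)
correct = sum(predict(centroids, f) == y for f, y in test)
accuracy = correct / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

The point of the sketch is the shape of the pipeline: digitize, label, extract features, train, then predict on recordings held back from training. Real heart sounds are far messier than these tones, so the accuracy printed here says nothing about clinical performance.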

Researchers are developing algorithms that analyze heart sounds recorded with digital stethoscopes, with the goal of a low-cost, noninvasive and accessible tool to screen for heart disease. However, many of these algorithms are built on datasets of moderate-to-severe heart disease. Because it is difficult to find patients at early stages of disease, before symptoms begin to show, the algorithms don't have much information on what hearts in the earliest stages of disease sound like.

To bridge this gap, our team is using animal models to teach algorithms to find early signs of disease in heart sounds. After training the algorithms on these sounds, we assess their accuracy by comparing their predictions with image scans of calcium buildup in the heart. Our research suggests that an AI-based algorithm can classify healthy heart sounds correctly over 95% of the time and can even differentiate between types of heart disease with nearly 85% accuracy. Most importantly, our algorithm is able to detect early stages of disease, before cardiac murmurs or structural changes appear.
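For readers curious how figures like these are typically computed, here is a minimal Python sketch, assuming a list of reference labels (in the study these would come from imaging) and a matching list of algorithm predictions. The labels and values below are made up for illustration and are not the study's data; the function names are hypothetical.

```python
def overall_accuracy(reference, predicted):
    """Fraction of recordings where the prediction matches the reference."""
    matches = sum(r == p for r, p in zip(reference, predicted))
    return matches / len(reference)

def per_class_rate(reference, predicted):
    """For each reference label, the fraction of its recordings predicted correctly."""
    totals, hits = {}, {}
    for r, p in zip(reference, predicted):
        totals[r] = totals.get(r, 0) + 1
        if r == p:
            hits[r] = hits.get(r, 0) + 1
    return {label: hits.get(label, 0) / totals[label] for label in totals}

# Toy example (invented labels, not results from the research described here).
reference = ["healthy"] * 4 + ["disease_a"] * 3 + ["disease_b"] * 3
predicted = ["healthy", "healthy", "healthy", "healthy",
             "disease_a", "disease_a", "disease_b",
             "disease_b", "disease_b", "disease_a"]

print(overall_accuracy(reference, predicted))
print(per_class_rate(reference, predicted))
```

Reporting a per-class rate alongside overall accuracy matters because a model can score well overall while still confusing one disease type with another.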

We believe teaching AI to hear what humans can't could transform how doctors diagnose and respond to heart disease.


Valentina Dargam receives funding from the Florida Heart Research Foundation and the National Institutes of Health.

Joshua Hutcheson receives funding from the Florida Heart Research Foundation, the American Heart Association, and the National Heart, Lung, and Blood Institute of the National Institutes of Health.