The words "joke" and "ruin" might not rhyme in English. But, thanks to a new, interactive database of American Sign Language (ASL), called ASL-LEX 2.0, we can now see that these two words do in fact rhyme in ASL.

"In ASL, each word has five linguistic parameters: handshape, movement, location, palm orientation, and non-manual signs. Rhymes involve repetition based on one or more of these parameters," says Michael Higgins, a first-year PhD student in Boston University's Wheelock College of Education & Human Development's Language & Literacy Education program. He is deaf, and has been using the ASL-LEX 2.0 database to investigate the relationship between ASL and English proficiency in deaf children.

Since its launch in February 2021, alongside a published paper highlighting the ways the database has expanded, ASL-LEX 2.0, now the largest interactive ASL database in the world, has made learning about the fundamentals of ASL easier and more accessible. "ASL-LEX 2.0 is an invaluable resource. Being able to access linguistic information, including the five parameters on every sign, in one place is enormously helpful," Higgins says.

"English speakers know cat and hat rhyme in English, and we have all kinds of resources for thinking about the properties of English, French, and many spoken languages, but at the outset we really didn't know much about the lexicon of ASL," says Naomi Caselli, a Wheelock assistant professor and researcher of deaf studies who helped create the database, and leads the LexLab. A lexicon is the vocabulary that makes up a language-for ASL, the lexicon describes the language's entire universe of movements and sign forms.

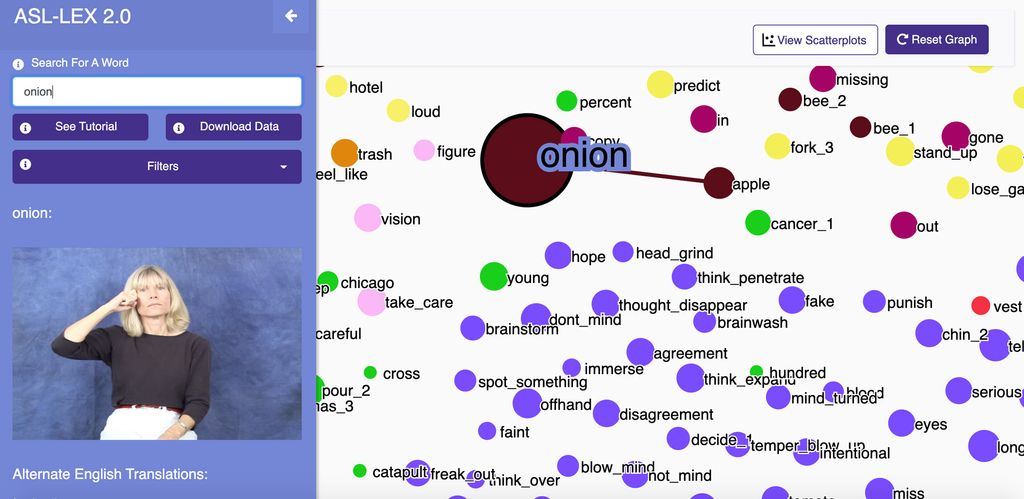

It took BU researchers and their collaborators, Zed Sevcikova Sehyr and Karen Emmorey of San Diego State University and Ariel Cohen-Goldberg of Tufts University, six years of work to create ASL-LEX 2.0. It improves upon ASL-LEX, an earlier version of the database that the team first released in 2016. In 2017, ASL-LEX was awarded the Vizzie's People's Choice prize for best interactive visualization. With the help of a three-year grant from the National Science Foundation, the expanded 2.0 version of the original site is now bolstered with 2,723 signs and more ways to visualize the ASL lexicon.

Computer engineers from BU's Rafik B. Hariri Institute for Computing and Computational Science & Engineering played a major role in the project, working in collaboration with the researchers to rebuild the site from the ground up with more sophisticated tools for data visualizations.

The database's collection of "phonology," or sign forms, is organized based on hand shapes, hand locations, and finger movements. (In ASL, phonology refers to how a sign looks and is formed, whereas in spoken language it refers to the sounds the speaker produces.) Groups of signs, called "nodes," are then associated with one another based on whether or not they rhyme, or share patterns in how they are formed. Using colors to visually group similar phonologies together, ASL-LEX 2.0 allows users to navigate between nodes, almost like a map.
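The idea of linking signs that share patterns in how they are formed can be illustrated with a small sketch. This is not how ASL-LEX 2.0 itself is implemented; the `Sign` class, the `shared_parameters` and `build_neighbors` functions, the placeholder signs and parameter values, and the rule that two signs are linked when they share at least two parameters are all assumptions made for illustration.

```python
from dataclasses import dataclass
from itertools import combinations

# Hypothetical subset of sign parameters used for comparison.
PARAMETERS = ("handshape", "movement", "location")


@dataclass(frozen=True)
class Sign:
    """A toy record for one sign; all values below are placeholders."""
    gloss: str
    handshape: str
    movement: str
    location: str


def shared_parameters(a: Sign, b: Sign) -> set:
    """Return the names of the parameters on which two signs agree."""
    return {p for p in PARAMETERS if getattr(a, p) == getattr(b, p)}


def build_neighbors(signs, min_shared=2):
    """Link every pair of signs that shares at least `min_shared` parameters.

    The threshold is an illustrative assumption, not the database's rule.
    """
    neighbors = {s.gloss: set() for s in signs}
    for a, b in combinations(signs, 2):
        if len(shared_parameters(a, b)) >= min_shared:
            neighbors[a.gloss].add(b.gloss)
            neighbors[b.gloss].add(a.gloss)
    return neighbors


# Placeholder data, not real ASL-LEX entries.
signs = [
    Sign("SIGN_A", "shape_1", "move_1", "loc_1"),
    Sign("SIGN_B", "shape_1", "move_1", "loc_2"),
    Sign("SIGN_C", "shape_2", "move_2", "loc_2"),
]

neighbors = build_neighbors(signs)
for gloss, linked in neighbors.items():
    print(gloss, sorted(linked))
```

In this toy data, SIGN_A and SIGN_B agree on handshape and movement, so they are linked, while SIGN_C shares only a location with SIGN_B and stays unconnected. Raising or lowering `min_shared` changes how strict the notion of "rhyme" is.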

"There are so few resources on sign language out there that even just a list of the signs of ASL, in a curated format, was really useful to researchers, teachers, and people who are learning ASL," Caselli says. She grew up learning both English and ASL. Since the first version of ASL-LEX launched in 2016, ASL teachers have used it to develop nursery rhymes and word instruction, and researchers have used it to develop new studies investigating how kids and adults perceive and learn signs.

"With deaf children, there is a significant risk of delayed language acquisition for the simple reason that almost every deaf child is born into a family that communicates with spoken language," Higgins says. "Therefore, special care must be taken to ensure that a child acquires a language. ASL rhymes may provide an essential and foundational piece of language learning for deaf children."

Along with a visual demonstration, each sign in ASL-LEX 2.0 comes loaded with other information, such as the average age at which people learn that sign.

"This [rich set of information about each sign] will hopefully bolster language learners to form their own stories of the signs and have a more informed understanding of ASL," says Anna Lim, a current doctoral student at BU, who was a research assistant in the LexLab, coding phonological descriptions of signs in ASL-LEX. "The database can show users the sorts of variations each sign may have. For example, one can search the sign 'strawberry' and see there are four variations…. This will prevent teachers and learners from becoming too prescriptivist in their approach to language teaching and learning."

At BU, Lim is now in her third year as a PhD student in the Language & Literacy Education program in Wheelock's Deaf Studies Program. As a deaf student, Lim works with Caselli and other Wheelock faculty on projects that aim to assess the vocabulary skills of deaf children.

While sign languages are increasingly popular both in college courses and for hearing babies, deaf people still fight for the right to learn and use ASL. Even with cochlear implants and hearing technology, many deaf children reach kindergarten with limited or no skills in any language, spoken or signed, Caselli explains. Some doctors, audiologists, and speech pathologists often encourage hearing parents not to learn ASL, out of fear that learning ASL will interfere with their children learning English, an approach she and other researchers see as misguided.

"While sign language is often withheld from deaf people, I had the privilege of learning both English and ASL from the time I was born, as a hearing person. I feel some obligation to make something of that privilege and contribute back to the Deaf community," Caselli says.

ASL-LEX 2.0 could also open new doors to making speech-based technologies more accessible to ASL signers. Similar databases for spoken languages, for instance, have been critical in the development of the speech recognition technologies behind Alexa or Siri, but deaf people have been increasingly excluded from those popular technologies. "It wasn't what we had in mind when we started the project, but I think ASL-LEX could be one part of the solution," Caselli says.

The ASL-LEX 2.0 team has already received a second grant from the National Science Foundation to start building the 3.0 version of ASL-LEX. In the next version, nodes will connect words not only when they share similar sign forms, but also when they have related meanings, like the words "cat" and "mouse." The team at BU is hopeful that this next step will advance ASL resources and related language-based technologies even further.