At the Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab), we're engineering and deploying sophisticated artificial intelligence tools that make scientific research faster and more efficient. Rapidly advancing AI techniques are also enabling entirely new fields of research that were impossible to conduct with conventional computing.

From particle physics to electronics, our teams are using advanced AI in a variety of subjects to ensure U.S. science and technology remain at the forefront worldwide. These seven projects serve as examples of the broad impact of our expertise.

Building the Lab of the Future

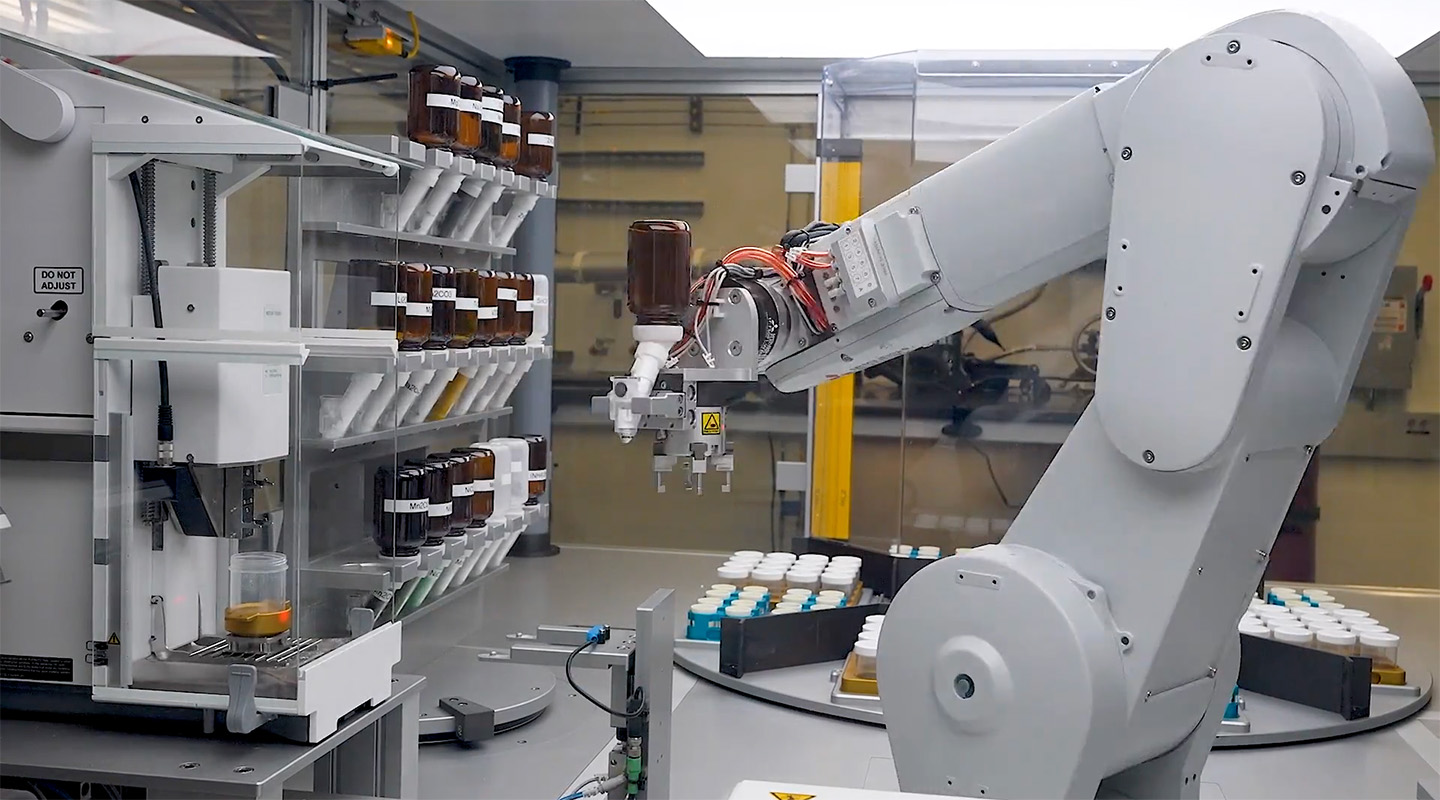

To accelerate development of useful new materials, Berkeley Lab researchers are building a new kind of automated lab that uses robots guided by artificial intelligence.

Scientists have computationally predicted the composition and structure of hundreds of thousands of novel materials that could be promising for technologies such as fuel cells and batteries, but testing whether any of those materials can actually be made is a slow process. Enter A-Lab, which can process 50 to 100 times as many samples per day as a human researcher and uses AI to quickly pursue promising finds. A-Lab is designed as a "closed-loop" system, in which decisions are made without human intervention. The system generates chemical recipes by drawing on the scientific literature and on data from Berkeley Lab's Materials Project and Google DeepMind's GNoME, and its robotic components then synthesize the best candidates.

The robots can operate around the clock, freeing researchers to spend more time designing experiments. This integration of theory, data, and automation represents a significant advancement in materials discovery capabilities.
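To make the closed-loop idea concrete, here is a deliberately simplified sketch in Python. It is not A-Lab's software or decision logic. The `Target` class, the `simulated_synthesis` function, the starting temperature, and the step-halving search rule are all hypothetical stand-ins, chosen only to show a loop in which each result decides the next experiment with no person in between.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    """A hypothetical material to synthesize (illustrative only)."""
    name: str
    best_temp_c: float  # hidden optimum, known only to the simulated furnace
    history: list = field(default_factory=list)  # (temperature, yield) pairs


def simulated_synthesis(target, temp_c):
    """Hypothetical stand-in for robotic synthesis plus characterization.

    Returns a yield between 0 and 1 that peaks at the target's best temperature.
    """
    return max(0.0, 1.0 - abs(temp_c - target.best_temp_c) / 400.0)


def closed_loop(target, goal=0.9, budget=10):
    """Run experiments until the yield goal is met or the budget is spent.

    Every decision (keep going, or reverse and take a smaller step) is made
    from measured results alone.
    """
    temp, step = 800.0, 100.0  # assumed starting recipe and step size
    best = simulated_synthesis(target, temp)
    target.history.append((temp, best))
    while best < goal and len(target.history) < budget:
        trial = temp + step
        result = simulated_synthesis(target, trial)
        target.history.append((trial, result))
        if result > best:
            temp, best = trial, result  # improvement: continue in this direction
        else:
            step = -step / 2  # no improvement: reverse and refine
    return temp, best


if __name__ == "__main__":
    sample = Target("hypothetical-oxide", best_temp_c=650.0)
    print(closed_loop(sample), len(sample.history), "experiments")
```

A real system would replace the one-dimensional temperature search with recipe proposals drawn from literature and computed data, and the simulated furnace with actual synthesis and characterization.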

A Faster Pipeline for Biology-Based Products

Staff scientist Héctor García Martín is leading research that merges AI, mathematical modeling, and robotics with synthetic biology, a field focused on designing organisms to meet particular specifications, such as programming healthy cells to attack cancerous ones or engineering a microbe to produce a valuable chemical.

García Martín's aim is to speed up the highly iterative processes involved in designing and testing new synthetic biology systems so that new, high-impact products can fill unmet needs in the medical, chemical, and energy industries. His work also expedites the trial-and-error steps needed to scale production of new bio-based compounds from small benchtop batches up to commercial volumes.

"I think an intense application of AI and robotics/automation to synthetic biology can accelerate synthetic biology timelines 20-fold," said García Martín. "We could create a new commercially viable molecule in approximately 6 months instead of about 10 years."

Discovering a Record-Breaking Capacitor Material

Berkeley Lab materials scientists collaborated with four institutions to develop a machine learning technique that can rapidly screen chemicals for use in film capacitors, a crucial component of electronic devices that operate at high temperatures with high power. Traditionally, researchers have needed to explore capacitor chemical candidates through trial and error, synthesizing a few candidates at a time and then characterizing their properties.

The machine learning technique was able to screen a library of nearly 50,000 chemical structures and identify three top candidates in a fraction of the time. Experiments showed one of these compounds has record-breaking performance that will make energy technologies more cost-effective and reliable.

Smarter Studies of Particle Smashing

Particle colliders reveal details of fundamental properties of matter that not only satisfy our curiosity about the universe, but also allow us to build better technologies. Unfortunately, these discoveries can take decades because collision experiments generate enormous datasets, and scientists must parse through the wealth of different measurements that are taken by sensitive detectors every time particles are smashed together.

Our scientists developed a machine learning tool called OmniFold to make the data sorting and analysis process easier, and used it to study the inner structure of protons using data from the HERA (Hadron-Electron Ring Accelerator) collider at the Deutsches Elektronen-Synchrotron (DESY) in Germany.

OmniFold can run analyses that used to take years in mere minutes. It runs on supercomputers like Perlmutter, the flagship system at Berkeley Lab's National Energy Research Scientific Computing Center (NERSC). Perlmutter and other NERSC systems provide world-leading computing resources to support DOE research. The team hopes to apply OmniFold to future particle datasets, including those generated at DOE's Electron-Ion Collider (EIC), which will be built at Brookhaven National Laboratory in partnership with Thomas Jefferson National Accelerator Facility. Berkeley Lab will contribute to the accelerator and detector of the EIC.

In another AI-powered contribution to particle studies, a Berkeley Lab team developed an award-winning machine learning tool that can find hidden patterns in particle accelerator datasets. Their code was developed for (and won) an international particle physics challenge, and has relevant applications in other areas as well, such as the search for transient or anomalous signals in astrophysics research. It now helps physicists find evidence of unknown particles that might otherwise be overlooked when performing traditional analyses.

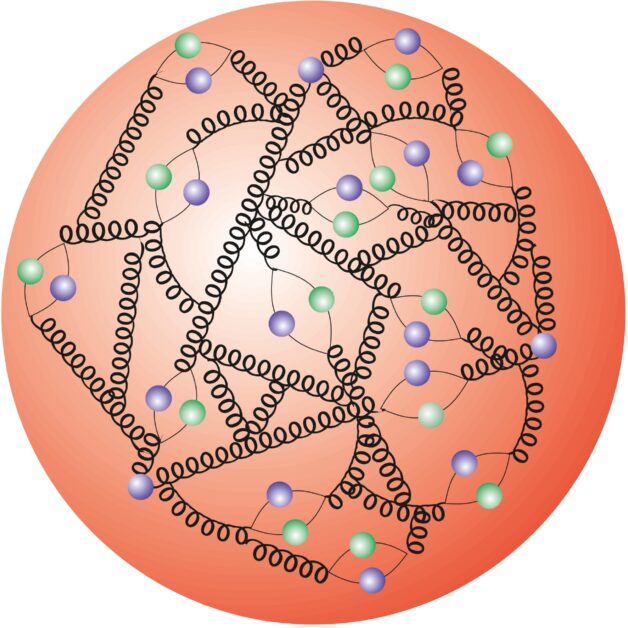

An illustration of a proton and its constituent parts: quarks (the green and purple balls) and gluons (the black coils). Scientists can investigate these parts by studying collisions between electrons and protons.

gpCAM: Revolutionizing Data Collection in Scientific Experiments

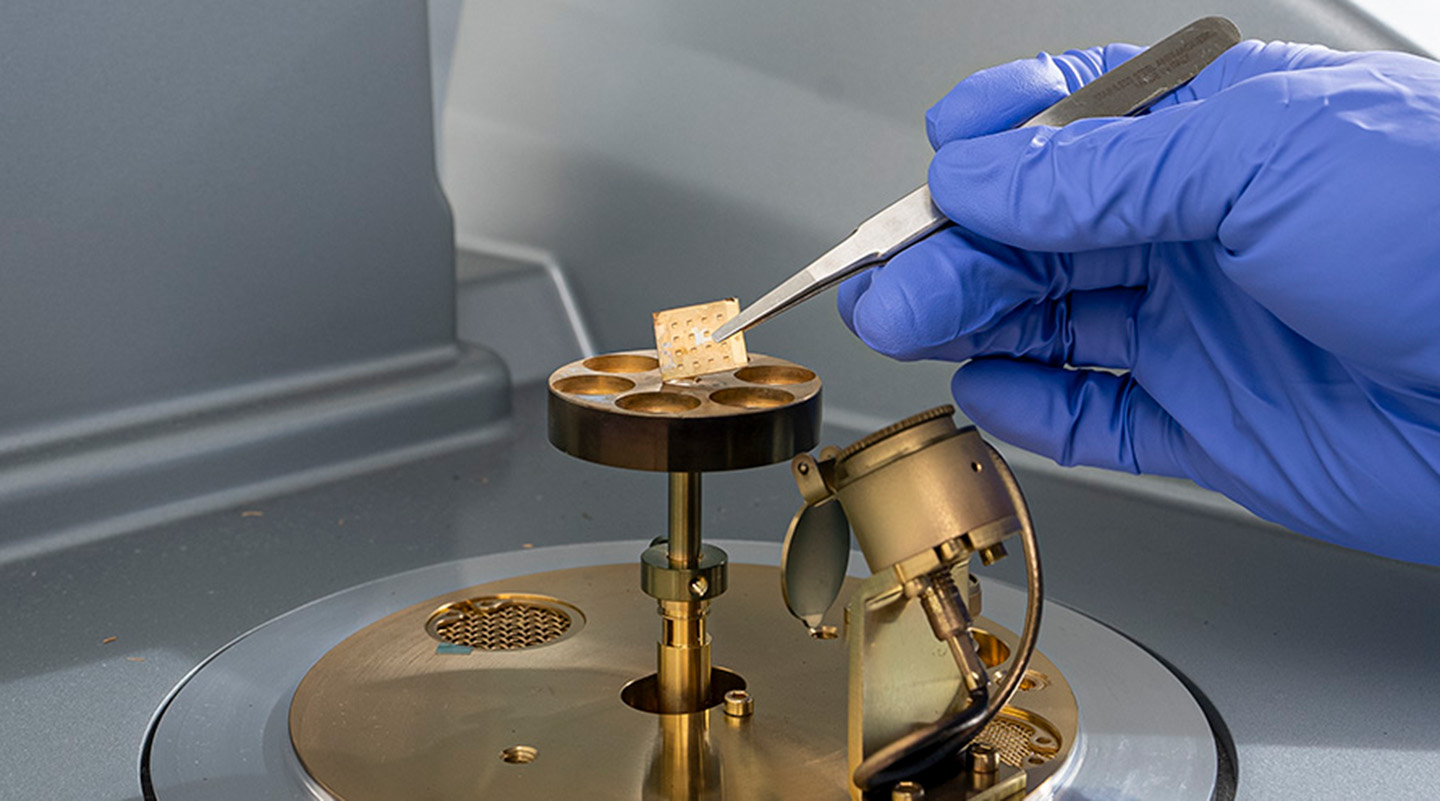

gpCAM is a powerful tool developed by the Center for Advanced Mathematics for Energy Research Applications (CAMERA) at Berkeley Lab that automates the process of collecting and analyzing data in scientific experiments. By using advanced mathematical methods, it helps researchers quickly and accurately predict outcomes and measure uncertainty in large datasets, making experiments faster and more efficient. This automation enhances the overall effectiveness and precision of scientific investigations across various fields. Today, gpCAM is used across the U.S. and Europe for autonomous data acquisition in simulations and experiments.

Recently, a Berkeley Lab team used gpCAM to study the quantum properties of 2D materials, cutting microscopy imaging time from three weeks to 8 hours. 2D materials have a variety of applications as components of transistors, semiconductors, batteries, lasers, and camera sensors, as well as uses in drug delivery and water purification. Researchers at Brookhaven National Laboratory and the Institut Laue-Langevin have also used gpCAM to optimize data acquisition in X-ray microscopy and neutron scattering experiments, respectively, significantly speeding up the collection of high-quality data and improving experimental workflows. gpCAM is open source and available to the scientific community. In 2024, it received an R&D 100 Award for its significant contribution to scientific research and innovation.
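The general idea behind autonomous data acquisition (predict, quantify the uncertainty, then measure where the uncertainty is largest) can be sketched in a few lines of plain Python. This is not gpCAM's API or its actual algorithm. The Gaussian-process model, the `rbf` kernel and its length scale, the `instrument` function, and the candidate grid are all assumptions made for illustration.

```python
import math


def rbf(a, b, length=0.15):
    """Squared-exponential kernel between two scalar positions (assumed form)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def posterior(xs, ys, x_new, noise=1e-6):
    """Gaussian-process posterior mean and variance at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k = [rbf(a, x_new) for a in xs]
    mean = sum(ki * ai for ki, ai in zip(k, solve(K, ys)))
    var = rbf(x_new, x_new) - sum(ki * vi for ki, vi in zip(k, solve(K, k)))
    return mean, max(var, 0.0)


def instrument(x):
    """Hypothetical stand-in for a real measurement at position x."""
    return math.sin(6.0 * x) + 0.5 * x


def autonomous_scan(n_steps=8):
    """Repeatedly measure at the unmeasured position with the highest uncertainty."""
    candidates = [i / 50 for i in range(51)]
    xs = [0.0, 1.0]  # two seed measurements at the ends of the range
    ys = [instrument(x) for x in xs]
    for _ in range(n_steps):
        remaining = [c for c in candidates if c not in xs]
        nxt = max(remaining, key=lambda c: posterior(xs, ys, c)[1])
        xs.append(nxt)
        ys.append(instrument(nxt))
    return xs, ys


if __name__ == "__main__":
    positions, values = autonomous_scan()
    print([round(p, 2) for p in positions])
```

Choosing the point of maximum predicted variance is only one possible acquisition rule. It is used here because it shows how an uncertainty estimate can steer an experiment away from regions that are already well characterized.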

Machine Learning Software Enhances Molecular Modeling

Researchers at Berkeley Lab played a key role in developing DeePMD-kit, a machine-learning-based software package that dramatically improves the simulations used to characterize atomic processes. This breakthrough, which won the 2020 Association for Computing Machinery Gordon Bell Prize, allows scientists to study complex chemical processes like protein folding, combustion, and materials behavior at sizes and timescales previously out of reach, significantly accelerating research in materials science, chemistry, and biology.

DeePMD-kit uses neural networks trained on datasets describing the fundamental physics of atomic interactions. When run on a supercomputer, such as the Summit system at DOE's Oak Ridge National Laboratory, the software can simulate reactions involving more than 100 million atoms without losing accuracy, about ten times more particles than existing modeling programs can handle on top-of-the-line supercomputers. Beyond modeling more realistic numbers of atoms, the software also gives scientists more information from each analysis by simulating longer reaction times.

DeePMD-kit software is now available open source to the research community, paving the way for more efficient, AI-driven scientific discovery.

Machine Learning at the Control Panel

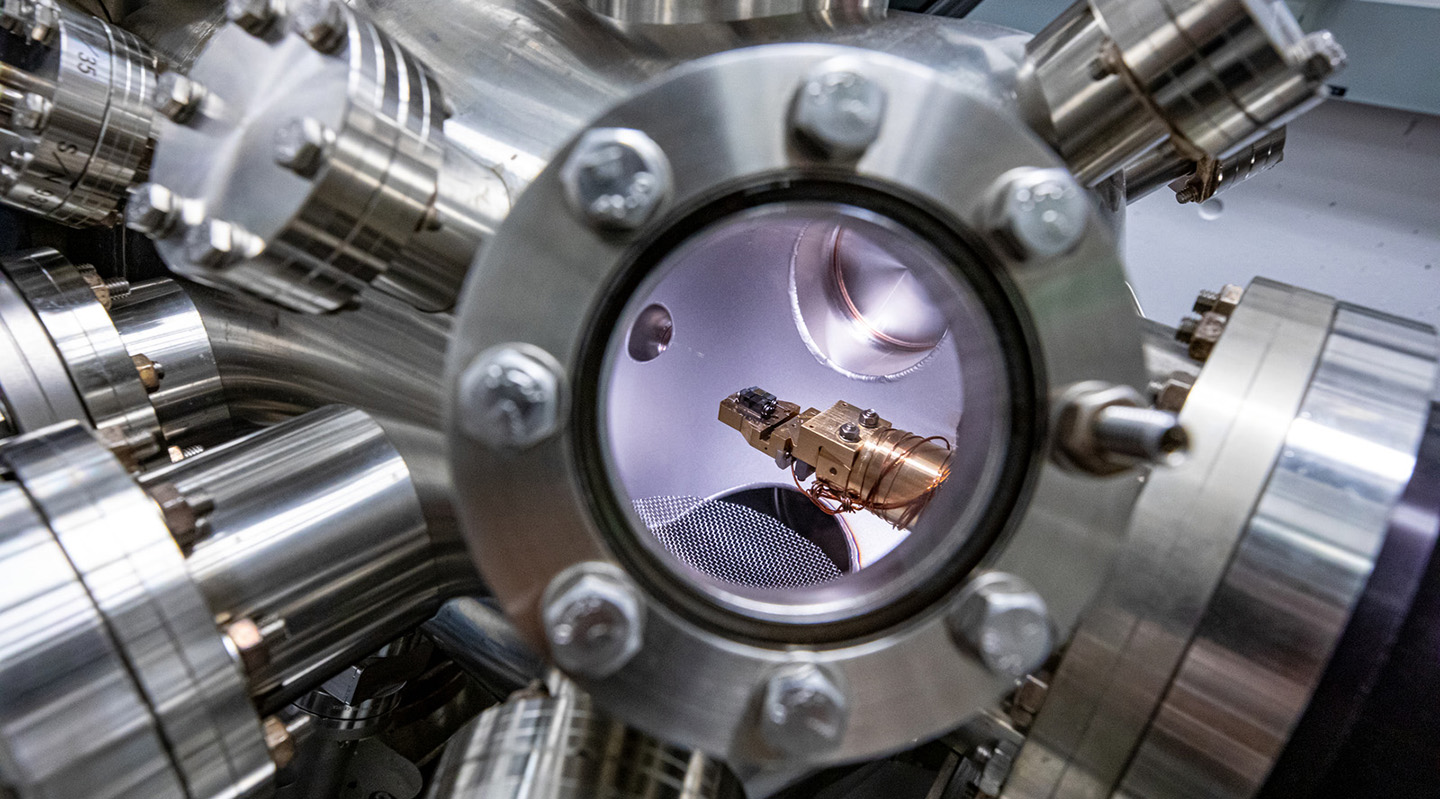

Our researchers co-developed a machine learning algorithm called GPTune to optimize control of the ions moving at nearly the speed of light in Brookhaven National Laboratory's Relativistic Heavy Ion Collider (RHIC). RHIC accelerates gold atoms that have been stripped of their electrons, precisely guiding two beams of the particles in opposite directions along a circular path and aiming them for maximum collisions. Studying these crashes, which produce streams of subatomic particles, can reveal new insights into fundamental physics.

Packing atoms into focused, intersecting beams is so complex, with so many control parameters involved, that the optimal way to do it isn't fully known ahead of time. Machine learning is an excellent resource for such situations. GPTune was trained on datasets from the collider, then used to set 45 operating parameters. After refinement, it was able to significantly increase the collider's beam intensity and precision. The tool can now be applied to a variety of scientific instruments.