A team led by researchers at the California NanoSystems Institute at UCLA created an artificial intelligence-based tool to assist an emerging technology for digital pathology. AI systems are currently being trained to paint microscopic images of transparent tissue samples that physicians examine for diagnosis, in a process called virtual staining. In the study, the researchers report on an AI of their own that detects potentially life-threatening errors, called realistic hallucinations, that occasionally come from virtual staining AI models. The tool detects those errors autonomously, without using slides stained correctly by human experts.

This AI technique is called autonomous quality and hallucination assessment tool for virtually stained tissue images, or AQuA. With a set of virtually stained images of human kidney and lung samples, the new tool achieved 99.8% accuracy in telling the difference between images with errors and those without. AQuA detected realistic hallucinations that were missed by board-certified pathologists who reviewed the same stained images. In other experiments, the AI detected hallucinations of different types than those included in the data used to train it, as well as errors in images stained by human lab technicians.

BACKGROUND

For pathologists to identify cancer and other diseases based on biopsies, the nearly transparent samples of thin tissue sections must be stained with special dyes, a practice applied for over a century in medicine. In the years ahead, generative AI systems are expected to emulate the chemical staining process in a way that's faster, cheaper and more efficient.

Labs can take hours, and sometimes up to a day, to stain a sample; AI algorithms could virtually stain a microscopic image in less than a minute. AI results would also be more consistent than the work of human experts, which can vary by country and region, between labs, and even among the work of a single technician. Staining in labs is labor-intensive, uses up expensive chemicals and produces millions of gallons of toxic wastewater each year, so virtual staining could also save on costs while being carbon neutral. And virtual staining doesn't deplete tissue samples, offering the potential to reduce or even eliminate repeat biopsies.

However, generative AI carries the risk of hallucinations. In the same way that a chatbot sometimes states false information as fact, a virtual staining AI might occasionally hallucinate structures that aren't present in the actual sample. Realistic hallucinations can be so convincing that they fool pathologists into believing that the tissue sample is stained very well. In reality, such a hallucination shows tissue structures that belong to an entirely imaginary patient.

In detecting diseases such as cancer or transplant rejection, the stakes are monumental. False positives can lead to unnecessary treatments, while unnoticed tumors or organ rejection can lead to catastrophic health problems and even death. Because pathology results help guide choices for therapy, evaluation errors due to hallucinations could sabotage a patient's treatment plan.

METHOD

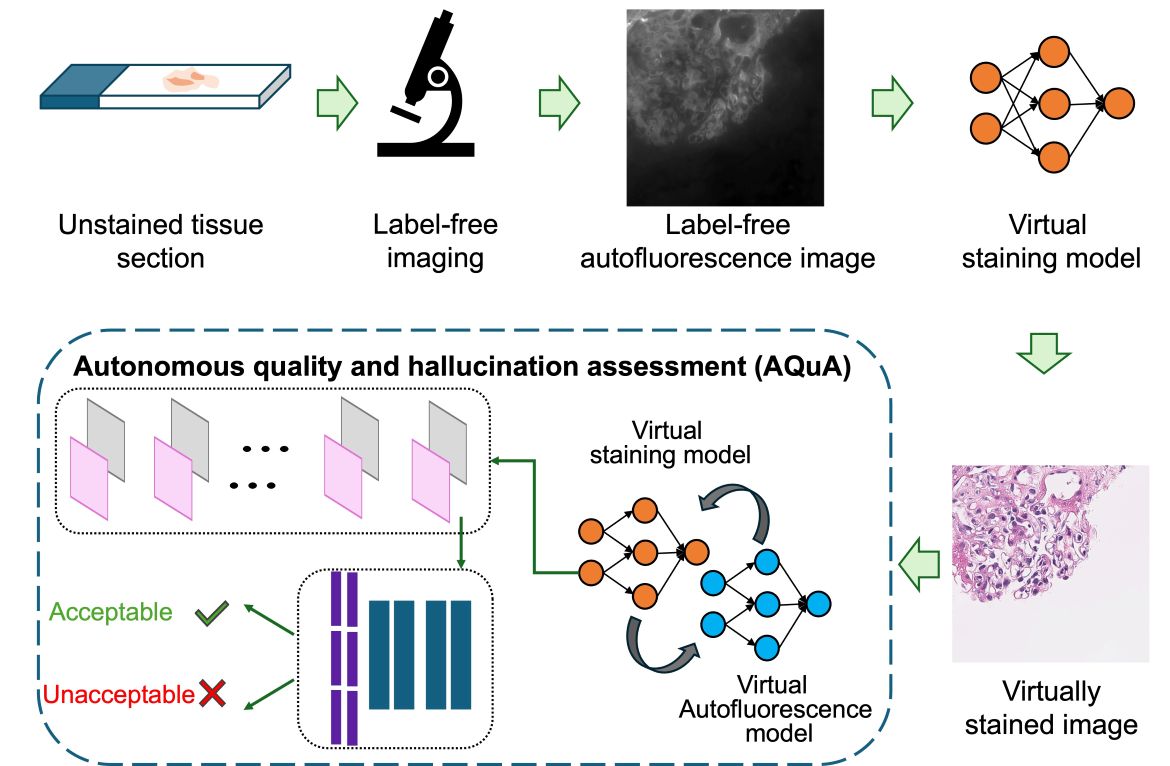

AQuA is a machine learning system designed to resemble the network of neurons in the human brain. Starting with a set of unstained microscopic images of human tissue samples, the AQuA model assessed changes in key microscopic measurements as the images cycled back and forth between a virtual staining algorithm and an algorithm that reverses the process to digitally generate unstained images. In the process, AQuA "learned" to connect virtual staining to the original microscopic image of the unprocessed tissue sample and tell the difference between correct and hallucinated images.
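The back-and-forth cycling described above can be illustrated with a toy sketch. This is not the researchers' implementation: the `stain` and `unstain` functions are hypothetical stand-ins for the two trained algorithms, and the drift measure (mean absolute difference from the original image) and the number of cycles are assumptions chosen only for illustration. In a real system, measurements like these would presumably feed a trained classifier rather than be read directly.

```python
import numpy as np

def cycle_features(image, stain, unstain, n_cycles=3):
    """Cycle an unstained image through stain -> unstain several times and
    record how far each reconstruction drifts from the original image."""
    features = []
    current = image
    for _ in range(n_cycles):
        stained = stain(current)      # virtual staining step
        current = unstain(stained)    # reverse step: back to an unstained image
        # Assumed measurement: mean absolute difference from the original.
        features.append(float(np.mean(np.abs(current - image))))
    return np.array(features)

# Hypothetical toy models (NOT real staining networks).
def toy_stain(x):
    return 2.0 * x + 1.0

def toy_unstain(y):
    # Exact inverse of toy_stain: the cycle is consistent.
    return (y - 1.0) / 2.0

def toy_unstain_drifting(y):
    # Imperfect inverse: each cycle adds a small offset, so errors accumulate.
    return (y - 1.0) / 2.0 + 0.05

rng = np.random.default_rng(0)
image = rng.random((8, 8))  # stand-in for an unstained microscopic image

consistent = cycle_features(image, toy_stain, toy_unstain)
drifting = cycle_features(image, toy_stain, toy_unstain_drifting)
print("consistent cycle drift:", consistent)
print("inconsistent cycle drift:", drifting)
```

In this toy setup, a consistent stain/unstain pair keeps the drift near zero across cycles, while an inconsistent pair shows drift that grows with each cycle. That growing gap is the kind of signal a downstream classifier could learn to associate with faulty output.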

IMPACT

When virtual staining AI models make their way into clinical use, the UCLA researchers' system could serve as an essential gatekeeper, ensuring that pathologists make potentially life-and-death judgments based on accurate pathology images. AQuA might also be extended to test and periodically certify virtual staining models as part of a digital pathology workflow. Another crucial application could be protecting against cyberattacks that purposely add errors to virtually stained images in order to inject chaos into a health system.

AUTHORS

The study's corresponding author is Aydogan Ozcan, the Volgenau Professor of Engineering Innovation and professor of electrical and computer engineering and of bioengineering in the UCLA Samueli School of Engineering, the CNSI's associate director for entrepreneurship, industry and academic exchange, and a professor with the Howard Hughes Medical Institute. The first author is Luzhe Huang, who earned a Ph.D. from UCLA in 2024. Other co-authors are Yuzhu Li and Nir Pillar, both of UCLA; Tal Keidar Haran of Hadassah Hebrew University Medical Center in Israel; and William Dean Wallace of USC.

DISCLOSURES

The technology described in this study is covered by a patent application filed by the UCLA Technology Development Group on behalf of the Regents of the University of California, with co-authors Ozcan, Huang and Pillar listed as inventors.

JOURNAL

The study was published in the journal Nature Biomedical Engineering.

DOI: 10.1038/s41551-025-01421-9

FUNDING

The study was supported by UCLA Samueli's V. M. Watanabe Excellence in Research Award.