People accept human nurses overruling patient autonomy and deciding on forced medication, but this trust does not extend to nursing robots.

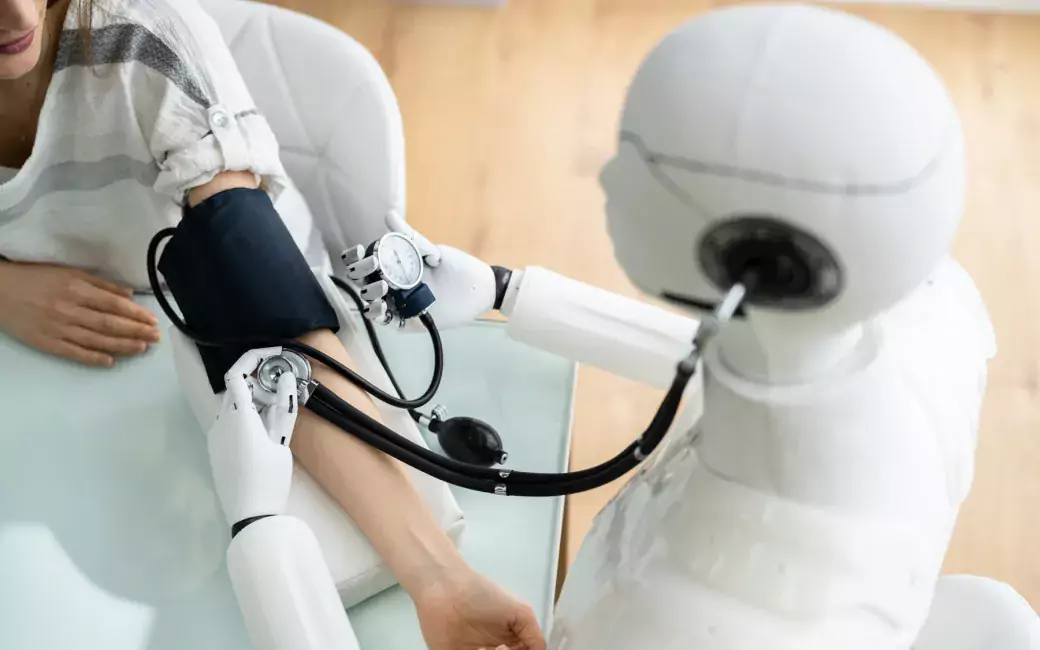

A study recently completed at the University of Helsinki investigated people's judgments of decisions made by nursing robots.

Based on the study, it seems that AI-based medical applications need human sensitivity and empathy for patients to consider their solutions and decisions as acceptable as those made by humans.

"Research in moral psychology has paid little attention to patient autonomy or internal conflicts in medical ethics - especially from the perspective of having robots as actors," says researcher Michael Laakasuo.

According to Laakasuo, the goal of the study was to expand the field of experimental moral psychology towards the examination of medical ethics.

People not convinced by the moral responsibility of nursing robots

As part of the study, participants assessed the morality of medical decisions made in a fictional story. People accepted a decision by either a robot or a human nurse not to comply with a chief physician's instructions to medicate a patient against their will. In contrast, they found it unacceptable for the nursing robot to overrule the patient's will by forcibly medicating them, although a similar decision by the human nurse was accepted.

"The question pertaining to forced medication revealed that decisions made by robot nurses and human nurses are not treated in the same way even if they have identical consequences," Laakasuo says.

"What may be the most worrying finding is that when the story was changed to have the patient die of a sudden bout of illness during the night, the human was perceived to be more morally responsible for the patient's fate than the nursing robot, although there was no connection between the therapeutic decision and the patient's death."

Robots are not a simple solution to the lack of human resources

"The findings could provide useful perspectives on the development of smart medical applications and robotics," Laakasuo muses.

The healthcare sector is struggling with a global shortage of nurses. The results of the study indicate that this lack of resources cannot be comprehensively solved with the help of machines - people still want to have other people care for them in the future.

"In terms of further research, the findings open up new avenues for research on moral cognition and on human-robot interaction, particularly in the field of medical ethics," says Laakasuo.