PlantSeg complements microscopy methods for better understanding tissue development

Imagine working on a jigsaw puzzle with so many pieces that even the edge pieces seem indistinguishable from those at the puzzle's centre. The solution seems nearly impossible. To make matters worse, this is a futuristic puzzle whose pieces are not only numerous but ever-changing. You must not only solve the puzzle but also work out how each piece brings the whole picture into focus.

That is the challenge molecular and cellular biologists face when sorting through cells to study morphogenesis, the process by which an organism's structures originate and take shape as it develops. If only there were a tool that could help. A recent paper in eLife shows that there now is.

An EMBL research group led by Anna Kreshuk, a computer scientist and expert in machine learning, joined the DFG-funded FOR2581 consortium of plant biologists and computer scientists to develop a tool that could solve this cellular jigsaw puzzle. The result, PlantSeg, began as computer code and later gained a more user-friendly graphical interface. With it, the team built a simple, open-access method that provides the most accurate and versatile analysis of plant tissue development to date. The collaboration brought together researchers from EMBL, Heidelberg University, the Technical University of Munich, and the Max Planck Institute for Plant Breeding Research in Cologne.

"Building something like PlantSeg that can take a 3D perspective of cells and actually separate them all is surprisingly hard to do, considering how easy it is for humans," Kreshuk says. "Computers aren't as good as humans when it comes to most vision-related tasks, as a rule. With all the recent development in deep learning and artificial intelligence at large, we are closer to solving this now, but it's still not solved, not for all conditions. This paper is the presentation of our current approach, which took some years to build."

To study tissue morphogenesis at the cellular level, researchers need to image individual cells. Because there are so many cells, they also have to separate, or 'segment', them so that each cell can be seen individually and its changes analysed over time.

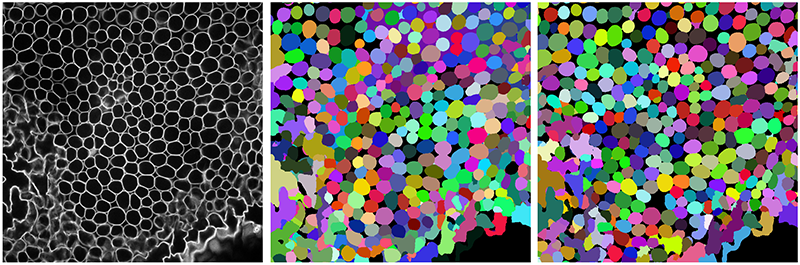

"In plants, you have cells that look extremely regular that in a cross-section look like rectangles or cylinders," Kreshuk says. "But you also have cells with so-called 'high lobeness' that have protrusions, making them look more like puzzle pieces. These are more difficult to segment because of their irregularity."

Kreshuk's team trained PlantSeg on 3D microscope images of the reproductive organs and developing lateral roots of a common plant model, *Arabidopsis thaliana*, also known as thale cress. The algorithm had to cope with variation in cell size and shape: some cells were regular, others much less so. As Kreshuk points out, such variability is in the nature of tissue.

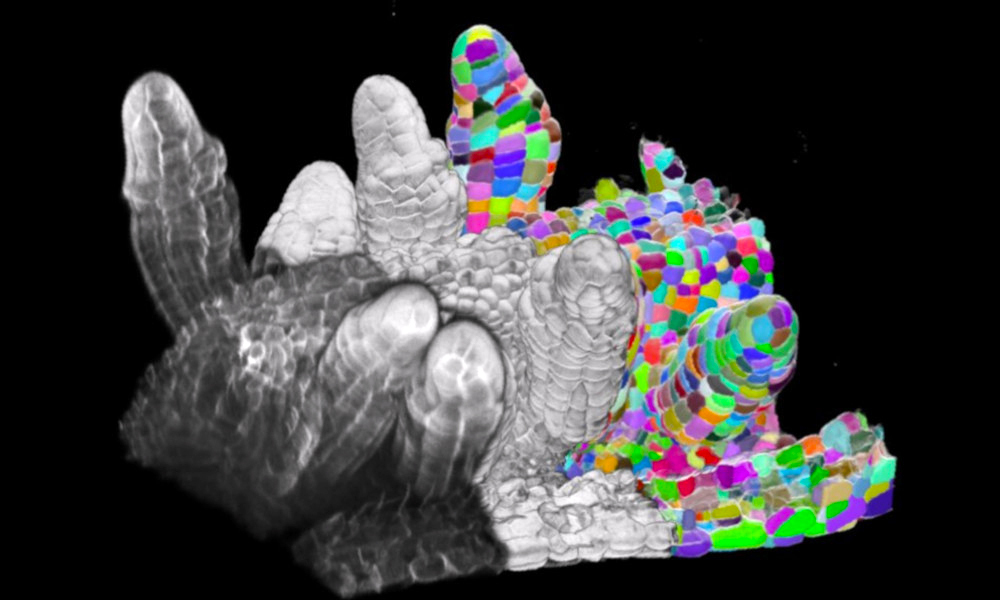

One beautiful side of this research is visual. The microscopy images fed to the algorithm came back as colourful renderings that outline each cellular structure, making the segmentation easy to see.

"We have giant puzzle boards with thousands of cells and then we're essentially colouring each one of these puzzle pieces with a different colour," Kreshuk says.
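The "colouring" Kreshuk describes corresponds to what image analysts call a label image: every pixel belonging to one cell carries the same integer, so each cell can be drawn in its own colour. The Python sketch below is only an illustration of that idea, not PlantSeg's method. It assumes a tiny 2D grid of hypothetical boundary probabilities (bright where a cell wall is stained) and uses a simple threshold followed by a flood fill (connected-component labelling). PlantSeg itself works on 3D volumes with a trained neural network and a more robust partitioning step; the function name `label_cells` and the 0.5 threshold are illustrative assumptions.

```python
from collections import deque


def label_cells(boundary, threshold=0.5):
    """Give every region enclosed by cell boundaries its own integer label.

    boundary: 2D list of hypothetical boundary probabilities in [0, 1],
    standing in for a boundary-stained image (an illustrative assumption).
    Returns a 2D list where 0 marks boundary pixels and 1, 2, 3, ... mark cells.
    """
    rows, cols = len(boundary), len(boundary[0])
    labels = [[0] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            # Start a new cell at any interior pixel that has no label yet.
            if boundary[r][c] < threshold and labels[r][c] == 0:
                next_label += 1
                labels[r][c] = next_label
                queue = deque([(r, c)])
                # Flood-fill the connected interior region (4-connectivity).
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and boundary[ny][nx] < threshold
                                and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
    return labels


# Two "cells" separated by a bright stained wall in the middle column.
image = [
    [0.1, 0.1, 0.2, 0.9, 0.1, 0.1, 0.1],
    [0.1, 0.0, 0.1, 0.8, 0.2, 0.0, 0.1],
    [0.2, 0.1, 0.1, 0.9, 0.1, 0.1, 0.0],
]
for row in label_cells(image):
    print(row)  # prints [1, 1, 1, 0, 2, 2, 2] for each of the three rows
```

A single gap in a stained wall would let the flood fill leak into the neighbouring cell and merge the two, which is one reason that segmenting real, noisy 3D tissue is, as Kreshuk says, surprisingly hard.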

Plant biologists have long needed a tool like this, because morphogenesis lies at the heart of many questions in developmental biology. The algorithm enables many kinds of shape analysis: for example, tracking how cell shapes change through development, under different environmental conditions, or between species. The paper gives several such examples: characterising developmental changes in ovules, studying the first asymmetric cell division, which initiates the formation of the lateral root, and comparing the shapes of leaf cells in two different plant species.

Although the tool currently targets plants, Kreshuk points out that it could be adapted for other organisms as well.

Machine learning-based algorithms, like the ones at the core of PlantSeg, are trained on examples of correct segmentation. The group has trained PlantSeg on many plant tissue volumes, so it now generalises quite well to plant data it has not seen before. The underlying method, however, is applicable to any tissue with cell boundary staining, and it could easily be retrained for animal tissue.

"If you have tissue where you have a boundary staining, like cell walls in plants or cell membranes in animals, this tool can be used," Kreshuk says. "With this staining and at high enough resolution, plant cells look very similar to our cells, but they are not quite the same. The tool right now is really optimised for plants. For animals, we would probably have to retrain parts of it, but it would work."

Currently, PlantSeg is an independent tool, but Kreshuk's team will eventually merge it into the ilastik Multicut workflow, another tool her lab is working on.

